From User Insights to Actionable Metrics: A User-Focused Evaluation of Privacy-Preserving Browser Extensions

Ritik Roongta

New York University

Brooklyn, USA

Rachel Greenstadt

New York University

Brooklyn, USA

ABSTRACT

The rapid growth of web tracking via advertisements has led to an increased adoption of privacy-preserving browser extensions. These extensions are crucial for blocking trackers and enhancing the overall web browsing experience. The advertising industry is constantly changing, leading to ongoing development and improvements in both new and existing ad-blocking and anti-tracking extensions. Despite this, there is a lack of comprehensive studies exploring the set of user concerns associated with these extensions. Our research addresses this gap by identifying five user concerns and establishing a privacy and usability topics framework specific to privacy-preserving extensions.

Also, many of these user concerns have not been extensively studied in prior work. Therefore, we conducted an extensive literature review to identify shortcomings in the current benchmarking methodology. This led to the development of new techniques, including experiments to measure newly identified metrics. Our study reveals eight new metrics for privacy-preserving extensions that have not been previously measured. Additionally, our study enhances the measurement methodology for two metrics, ensuring precise results. We focus particularly on metrics that users commonly encounter on the web and report in Chrome web store reviews. Our goal is to serve as a foundational reference for future research in this field.

CCS CONCEPTS

• Security and privacy → Human and societal aspects of security and privacy; Usability in security and privacy; Privacy protections;

KEYWORDS

Web Measurement, Privacy, AdBlockers, User Concerns, Metrics,

Filterlists

ACM Reference Format:

Ritik Roongta and Rachel Greenstadt. 2024. From User Insights to Actionable Metrics: A User-Focused Evaluation of Privacy-Preserving Browser Extensions. In ACM Asia Conference on Computer and Communications Security (ASIA CCS ’24), July 1–5, 2024, Singapore, Singapore. ACM, New York, NY, USA, 17 pages. https://doi.org/10.1145/3634737.3657028

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than the author(s) must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from [email protected].

ASIA CCS ’24, July 1–5, 2024, Singapore, Singapore

© 2024 Copyright held by the owner/author(s). Publication rights licensed to ACM.

ACM ISBN 979-8-4007-0482-6/24/07...$15.00

https://doi.org/10.1145/3634737.3657028

1 INTRODUCTION

The web has become a key part of our daily lives, especially post-COVID-19. As of 2023, the average daily time spent online has surpassed six hours [16]. Reports from the World Bank and the National Institutes of Health have highlighted the steep rise in e-commerce [11], health [14], and banking [9] traffic. Greater internet usage of these services leads to increased exposure of Personally Identifiable Information (PII). Previous research [45, 50, 56, 82] has shown that tracking through advertisements is not only intrusive but also deteriorates the overall web browsing experience for users. To safeguard themselves from increased web tracking and enhance their web browsing experience, users have embraced privacy-preserving extensions for their web browsers, many of which operate by blocking ads or trackers. As reported in the recent Pagefair report [8], the number of users blocking ads on the internet exceeded 820 million in 2022. Another study found that 22.2% of all users and 30% of Chrome users were using AdBlock Plus [72]. Furthermore, the FBI recommended that consumers and businesses use ad-blockers in a 2022 advisory [10].

Privacy-preserving extensions typically function by implementing various features that protect against tracking, data collection, and privacy-invasive practices used by websites and advertisers. Apart from being popular, the space of privacy-preserving extensions is highly competitive. There are more than four privacy-preserving extensions with over 10 million downloads on the Chrome Web Store alone. This number rises substantially for extensions having over 100k downloads, indicating that there is no single widely accepted extension.

Using browser extensions comes with a few challenges. First, these extensions operate with the same privileges as the browser itself, creating new avenues for exploitation [58]. Second, they consume significant computational resources as they continuously check for intrusive ads and trackers. Third, extensions often need to communicate with various third parties to sync data, potentially introducing new channels for data leaks [12]. Lastly, while attempting to block ads, an extension may inadvertently break the functionality of a website, posing a usability trade-off for users who still need access to the desired content [67]. Users and their advocates face a fog of uncertainty about the impact that using privacy-preserving extensions and ad-blockers will have on their experience and how much of the differences, such as broken layouts, are intrinsic to the technologies or based on a differential treatment by sites and services.


Table 1: List of popular privacy-preserving extensions showcasing the diversity of the domains covered. Extensions have been categorized based on their descriptions on the web store and public perception. The popular abbreviations for each extension, developer information, number of reviews, release year, and blocking strategy for each extension are tabulated.

| Category | Extension | Abbr. | Developer | No. of Reviews | Release year | Blocking strategy |
|---|---|---|---|---|---|---|
| Ad-Blockers & Privacy Protection | AdBlock Plus | ABP | Eyeo GmbH | 18156 | 2011 | Filterlist |
| Ad-Blockers & Privacy Protection | UBlock Origin | UbO | Raymond Hill | 9896 | 2014 | Filterlist |
| Ad-Blockers & Privacy Protection | Adguard | AdG | Adguard Software Ltd | 5610 | 2013 | Filterlist |
| Ad-Blockers & Privacy Protection | Ghostery | - | Ghostery GmbH | 3364 | 2012 | Filterlist |
| Privacy Protection | Privacy Badger | PB | Electronic Frontier Foundation | 542 | 2014 | Filterlist |
| Privacy Protection | Decentraleyes | - | Thomas Rientjes | 107 | 2017 | CDN list |
| Privacy Protection | Disconnect | - | Casey Oppenheim | 648 | 2011 | Filterlist |

The rapid expansion of the advertising industry has led to increasingly aggressive ad tracking and targeting techniques [24, 70]. To counter this, ad-blocking extensions have been continuously enhanced. However, our preliminary study highlighted user dissatisfaction with these tools: 31% of all reviews on the Chrome web store mention at least one critical aspect of these extensions. This indicates a gap in extension developers’ understanding of user needs. Additionally, many extensions fail to meet all user requirements. Therefore, it’s crucial to gain a deeper insight into user concerns and identify the shortcomings in existing evaluation methods. This will enable future developers to create effective and user-centric ad-blocking extensions.

Our work addresses the following research questions:

• RQ1: What are the various user concerns around privacy-preserving browser extensions?

• RQ2: What are the unidentified metrics in the usability and privacy benchmarking methodologies of these extensions?

• RQ3: How can the novel metrics be measured to provide an exhaustive benchmark for evaluating the extensions?

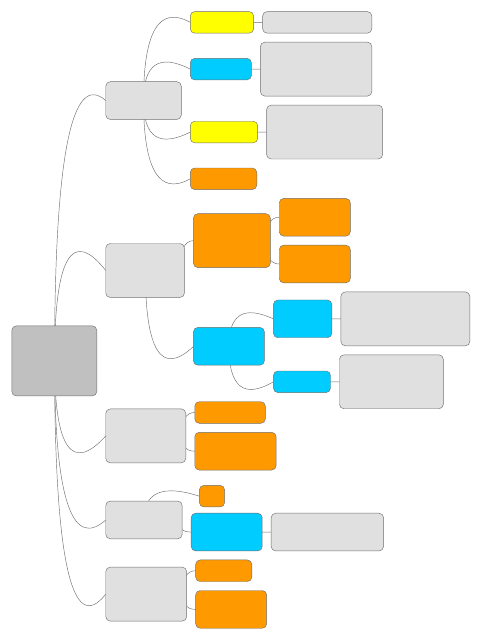

To address these questions, we adopt a three-phased approach as shown in Figure 1. The first phase is to develop a usability and privacy framework, generated from the critical user reviews. If a user expresses discontent with any functionality of the extension in the review, we consider it a critical aspect and classify the review as critical. This framework contains 11 broad categories: block, ads, break, tracking, manual, filter, configuration, privacy policy, compatibility, data, and performance. We select critical reviews, using a critical score classifier, as they tend to be more informative about the problems faced by users. Our hypothesis is backed by a study done by researchers from Colorado State University [7], who found that negative information provides more cues for making a decision than the expected positive information. Each broad category further comprises a set of related keywords to enhance understanding and provide instructions for building new metrics. We use this framework to gauge the concerns users encounter while using these extensions. We identify and address five major user concerns (UCs): Performance, Web compatibility, Data and Privacy Policy, Extension effectiveness, and Default configurations.

In the second phase, we perform topic modeling on the critical review dataset to pinpoint important areas for extension evaluation. We conduct a thorough literature review to determine the metrics covered by existing benchmarking methods along with their specific measurement techniques, as illustrated later in Figure 3. Our analysis reveals that, of the 14 metrics identified, 10 have not been thoroughly measured or analyzed in past studies.

In the third phase, we conduct a series of experiments to evaluate the performance of the extensions across each identified metric. We analyze each metric in depth and offer reasonable proxies for the challenging metrics. For example, it is hard to measure reproducible breakages due to the dynamic nature of websites and the stochastic performance of automated crawls [78]. Nevertheless, our approach offers a significant step forward in understanding these metrics, laying a foundation for future, exhaustive evaluations in this domain. Our methods include techniques like web crawling, static analysis, and file comparisons. Detailed information on these methods is available in Section 5.

To conduct our study, we focus on the seven most popular privacy-preserving extensions spanning two broad categories (listed in Table 1). We select them based on their popularity as reported by AmIUnique, Wired, and MyIP [3, 13, 15]¹. Ad-Blockers & Privacy Protection (Category 1) includes extensions that block ads and third-party trackers. Privacy Protection (Category 2) consists of extensions with the main goal of enhancing user privacy by blocking trackers. Extensions like NoScript and ScriptSafe also enhance the privacy of users but block all the JavaScript present on a page, trading off usability for privacy and security. Hence, we do not use them in our analysis. Their performance can only be measured and compared when used with highly curated allowlists.

Our paper has four key contributions in the space of privacy-preserving extensions:

• We develop a fine-tuned classifier to identify and extract user reviews with critical insights.

• We generate a comprehensive framework for understanding the usability and privacy concerns of users around these browser extensions.

• We propose a comprehensive set of metrics to analyze these extensions and highlight the state of current research to identify gaps.

• We design new proxies to measure the performance of these extensions on the novel metrics and improve a few existing ones.

Together, these contributions provide a comprehensive taxonomy of user concerns, identify gaps in existing research, and enable effective comparison of privacy-preserving extensions.

¹ AdBlock was not included because Eyeo GmbH, the parent company of Adblock Plus, acquired AdBlock, Inc. in April 2021.

2 BACKGROUND

A browser extension, or browser plugin, is a small software application that enhances the capacity or functionality of a web browser [2]. A browser extension leverages the same Application Program Interfaces (APIs) available to the website’s JavaScript, in addition to its own set of APIs, thus offering additional capabilities. Privacy-preserving extensions offer advanced functionality and use sensitive permissions, making them an attractive target for adversaries. Previous studies [40, 64–67, 76, 81, 86] have extensively examined the privacy and performance of a subset of these extensions using static analysis and web measurement. Our research focuses on a superset of these studied extensions, filtering out the less popular, deprecated, or acquired ones.

Review Analysis is a widely used technique for understanding concerns in various domains and extracting useful features. Sentiment Analysis is often employed to aid in the process of analyzing reviews. Hu and Liu [51] studied customer reviews, using a small dataset of opinion words to calculate the sentiment of the reviews. Vu et al. [84] developed MARK, a keyword-based framework for semi-automated review analysis, employing a curated keyword dataset tailored to specific categories. A similar approach was adopted by Jindal and Liu [54]. All these approaches highlight the importance of having an initial keyword dataset to conduct such analyses, which is lacking in the case of privacy-preserving extensions. Pang et al., Kanojia, and Joshi [55, 57, 71] highlighted the challenges in performing sentiment analysis on user reviews from different domains and provided plausible solutions. Nisenoff et al. [67] used user reviews and ratings to study issues with Chrome extensions.

Topic Modeling using Latent Dirichlet Allocation (LDA) [39] has been extensively used in unsupervised categorization of large texts, social media content, user reviews, and more. Topic classification for privacy-preserving extensions has two major challenges. First, the absence of a privacy-focused taxonomy about extension usage limits the use of supervised learning. Second, unsupervised learning generates noise due to the randomness of reviews, resulting in a wide range of irrelevant topics. To address these challenges, one can combine manual coding and analysis with LDA to enhance precision [41, 61, 69, 80]. Xue et al. [85] and Sokolova et al. [77] used LDA with qualitative analysis for topic modeling on Twitter data. Hu et al. [52] highlighted the limitations of LDA and manual analysis in identifying customer complaints through hotel reviews.

Selenium [32] and Puppeteer [31] are leading automated crawlers for web measurement and testing. Selenium is versatile, supporting multiple languages and browsers, while Puppeteer, designed for NodeJS and Chrome, excels in speed and advanced browser control features. OpenWPM [47], another framework, is built upon Firefox and Selenium and has been widely used in various studies for web and privacy measurements.

Filterlists serve as an extensive, independent directory of filters and host lists designed to block advertisements, trackers, malware, and various online annoyances [23]. These lists are diligently maintained by a group of dedicated researchers who regularly update them, adding new trackers that emerge daily and removing entries that become obsolete. Some of the well-known filterlists [22] include EasyPrivacy, EasyList, Fanboy, and Peter Lowe’s serverlist [30]. Importantly, these filterlists are tailored to address the multilingual nature of the web, offering support for various languages to effectively handle content from different parts of the world. Most modern ad-blockers leverage these filterlists to function, using them as a foundation to block unwanted content and enhance the user’s web browsing experience.

3 IDENTIFYING USER CONCERNS

To identify the user concerns around privacy-preserving Chrome extensions (Table 1), we extract user reviews from the Chrome web store pages of the extensions and subsequently extract usability/privacy themes from the review dataset to generate the privacy and usability topic framework (see Table 5). Later, we identify five major user concerns from the topic framework. Only publicly available information was scraped, and no personal data or user profiles were collected or stored in this process. We also obtained an exemption from the IRB to solicit responses from the developers of these extensions about our analysis of their tools. In the following subsections, we highlight the methodology used and the topics extracted.

3.1 Review Analysis

To gain valuable insights into users’ perceptions, we analyze the reviews posted by users on the Chrome web store for the selected extensions. We scraped the reviews and support queries for the extensions in September 2022 using Selenium with a headless Chrome browser in incognito mode. By studying user perceptions, we gain insights into the usability and privacy topics that concern users when using these extensions. This process involves two steps: filtering unimportant reviews from the review dataset and applying topic modeling on the refined dataset.

Filtering Review Dataset. We collected over 40k user reviews and support queries of the seven extensions (see Table 1) from the Chrome web store. It’s essential to refine the review dataset to minimize noise in topic modeling, ensuring a focused and streamlined thematic framework. Prior studies [67, 83] have relied on star ratings, often sidelining higher-rated reviews under the assumption that they depict satisfied users. However, our preliminary investigation contradicts this notion. We discovered numerous cases where users mentioned concerns about the product despite awarding it a 4 or 5 star rating. For example, the review “Youtube’s been updating their stuff and it stopped working at least in my region. Hope the team gets a fixed or work around soon” highlights the extension’s inability to block YouTube ads despite giving it 5 stars.

Adopting the previous approach might lead to ignoring the issues highlighted in these higher-rated reviews. To address this, we categorize the reviews as critical or non-critical. A review is labeled as critical if the reviewer is unsatisfied with at least one privacy/usability feature of the extension, even if they are generally content with its overall performance. For example, consider the review: “Awesome until your web page recognizes it =(”. A non-critical review means that the reviewer did not express any hostile opinion about any aspect/feature of the extension. For example, consider the review: “It’s sooo good as it blocks every ads 100% recommend this!!!”. Topic-based examples are given in Table 5 of the Appendix.

Figure 1: The analysis unfolds in distinct stages as outlined in the flow diagram. Phase 1 involves developing the topic framework using review analysis to identify user concerns (RQ 1). In phase 2, through topic modeling and literature review, we find gaps in the benchmarking methods and introduce novel metrics for evaluation (RQ 2). Finally, phase 3 involves designing measurement experiments to evaluate the extensions against the novel and existing metrics (RQ 3). The contributions are highlighted in bold.

We develop a classifier to identify critical reviews by fine-tuning the BERT classifier. This technique broadly aligns with aspect-based sentiment analysis (ABSA) [79]. This classifier-driven automated tool helps to filter out non-critical reviews. To build and test this classifier, we created training and testing datasets of 620 and 130 manually annotated reviews, respectively. One author, along with an undergraduate researcher, independently annotated this pool of 750 reviews as either critical (assigned 0) or non-critical (assigned 1). To remove bias, the annotators reviewed the annotations, and any discrepancy in opinion (around 10%) was discussed and addressed. 45% of these reviews were annotated as critical and 55% as non-critical. The decision to use critical reviews is based on the notion that people tend to be descriptive when expressing criticism [7]. We ensure that there are enough reviews in the training dataset from every extension to cover the dynamic critiques of users across different extensions. We fine-tuned the Hugging Face sentiment analysis transformers model (distilbert-base-uncased)² by further training it on our manually annotated training dataset, thus providing us with a criticality score. A positive criticality score signifies that the reviewer didn’t express any feature/aspect-based critique in the review. The resulting model achieved an accuracy of 86.15% on our manually annotated test dataset, surpassing the performance of the original DistilBERT model, which attained only 42.2% accuracy.

² https://huggingface.co/distilbert-base-uncased

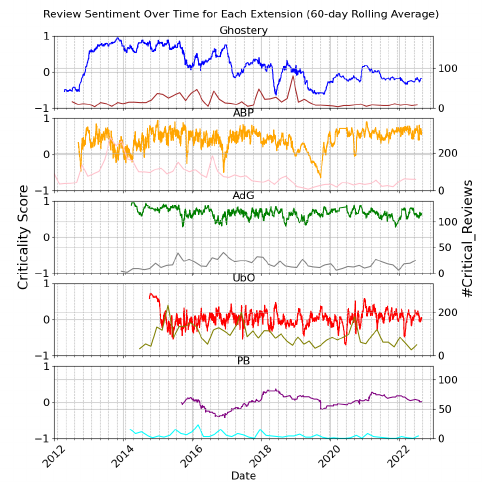

The robustness of our model is demonstrated in Figure 2. It shows the number of reviews within each criticality score bin of size 0.1. Our fine-tuned model classifies 92.33% of the reviews as critical/non-critical with a confidence level exceeding 85%. This share increases to 95.24% for a confidence level exceeding 75%. Thus, our fine-tuned classifier differentiates between critical and non-critical reviews with high confidence. The non-critical-to-critical ratio is highest for ABP and AdG, while for UbO, it is almost equal to one. This finding is surprising, considering UbO’s popularity among privacy-conscious individuals. One possible explanation is that privacy-aware users write descriptive reviews, resulting in a higher number of critical reviews for UbO. After filtering out reviews with a criticality score greater than -0.7, we get a pool of 12,572 critical reviews.
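The thresholding step described above can be illustrated with a minimal sketch. It assumes each review has already been assigned a criticality score in [-1, 1] by some classifier; the function names and the sample scores are hypothetical and are not taken from the authors' code.

```python
from typing import Iterable, List, Tuple

# Cut-off quoted in the text: reviews scoring above -0.7 are discarded.
CRITICAL_THRESHOLD = -0.7


def filter_critical(
    scored_reviews: Iterable[Tuple[str, float]],
    threshold: float = CRITICAL_THRESHOLD,
) -> List[Tuple[str, float]]:
    """Keep (text, score) pairs whose score is at or below the threshold."""
    return [(text, score) for text, score in scored_reviews if score <= threshold]


def confident_fraction(scores: Iterable[float], confidence: float) -> float:
    """Fraction of scores whose absolute value exceeds the confidence level."""
    scores = list(scores)
    if not scores:
        return 0.0
    return sum(1 for s in scores if abs(s) > confidence) / len(scores)


if __name__ == "__main__":
    # Hypothetical scores attached to the two example reviews quoted earlier.
    sample = [
        ("Awesome until your web page recognizes it =(", -0.93),
        ("It's sooo good as it blocks every ads 100% recommend this!!!", 0.97),
    ]
    print(filter_critical(sample))
    print(confident_fraction([score for _, score in sample], 0.85))
```

Treating the absolute score as the confidence level is one plausible reading of the 85% and 75% figures reported above; the paper does not spell out the exact computation.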

3.2 Topic Modeling

[Figure 2 plots: No. of Reviews vs. Sentiment Score. x-axis: sentiment score intervals from -1.0 to 1.0; y-axis: number of reviews. Panel (a) Ad-blockers and Privacy Protection: ABP, UbO, Ghostery, AdG. Panel (b) Privacy Protection: Disconnect, PB, Decentraleyes.]

Figure 2: Number of reviews within each 0.1 critical score interval. Our fine-tuned model classifies 92.33% of the reviews as either critical or non-critical with a confidence level exceeding 85% (see clusters around 1 and -1). Note: Since we use a (-1,1) annotation scale for the criticality score, there is no review with a sentiment score between -0.5 and 0.5. Hence that interval has been omitted.

To identify broad privacy and usability categories, we qualitatively analyze the critical reviews dataset. First, we use Latent Dirichlet Allocation (LDA) [63] along with a manual literature survey to perform unsupervised topic classification to get the initial set of codes. The literature survey consisted of an extensive exploration of various sources, including privacy policies, web store descriptions of extensions, the critical review dataset and support queries, and relevant peer-reviewed research literature on these extensions. These codes are further refined through manual classification to identify broad topics, wherein an iterative, inductive theory-driven data coding and analysis framework is used [42]. In this manual modeling process, we reduce the noise common in LDA by eliminating unnecessary codes and grouping similar ones. This leads to the formation of a smaller code set, refined through iterative discussions among authors. We identify 11 broad, independent topics related to user privacy and usability, under which other codes are categorized as subcategories or related keywords (Table 5). These related keywords are then used to categorize reviews into each broad topic through keyword extraction and matching [44], ensuring a precise and coherent classification. To establish the robustness of this framework, we randomly selected 20 reviews from each extension’s critical review pool and manually examined them for potential new privacy and usability topics. As no new topics or keywords were discovered during this process, it affirmed the robustness and comprehensiveness of our initial topic framework.
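The keyword extraction and matching step in Section 3.2 can be sketched as follows. The topic names come from the framework described in the text, but the keyword lists below are hypothetical stand-ins for the related keywords of Table 5, and whole-word regular-expression matching is an assumed implementation choice rather than the authors' documented method.

```python
import re
from typing import Dict, List, Set

# Topic names follow the paper's framework; keyword lists are HYPOTHETICAL
# placeholders for the related keywords listed in Table 5.
TOPIC_KEYWORDS: Dict[str, List[str]] = {
    "break": ["break", "broken", "not loading"],
    "performance": ["slow", "memory", "cpu", "lag"],
    "ads": ["ad", "ads", "youtube ads"],
    "privacy policy": ["privacy policy", "terms"],
}


def _compile(keywords: List[str]) -> "re.Pattern[str]":
    # Word boundaries stop "ad" from matching inside "loading" or "and".
    ordered = sorted(keywords, key=len, reverse=True)
    alternatives = "|".join(re.escape(k) for k in ordered)
    return re.compile(rf"\b(?:{alternatives})\b", re.IGNORECASE)


TOPIC_PATTERNS = {topic: _compile(words) for topic, words in TOPIC_KEYWORDS.items()}


def topics_for_review(review: str) -> Set[str]:
    """Return every broad topic with at least one keyword present in the review."""
    return {
        topic for topic, pattern in TOPIC_PATTERNS.items() if pattern.search(review)
    }
```

A review can land in several topics at once, which is consistent with the per-concern percentages reported later summing to more than 100%.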

User concerns. From the developed topic framework, we pinpoint five key user concerns (UCs) by clustering together broad topics that share similar fundamental issues. The broad topics are highlighted in the brackets.

• UC1 - Performance: What are the scenarios where a privacy-preserving extension slows down system performance or consumes excessive memory, thus affecting computer hardware? (performance)

• UC2 - Web compatibility: When does an extension break websites or cause delays in their rendering? Is it due to anti-adblocking scripts deployed by different sites, or by blanket blocking of JavaScript by the extensions? (compatibility, break)

• UC3 - Data and Privacy Policy: How is data handled, in stationary as well as transient form, by the extensions? Can you trust the extension’s permissions and policy landscape? (data, privacy policy)

• UC4 - Extension effectiveness: How effective are the extensions in blocking online tracking? Does their failure signify that they are malicious or simply incapable? (ads, tracking, block)

• UC5 - Default configurations: Which configurations and updates of these extensions have attracted critical attention from the users and made them wary of the privacy-preserving extensions? (configuration, manual, filter)

4 IDENTIFYING GAPS IN THE BENCHMARKS

Having identified the user concerns related to these extensions, our next step is to assess their effectiveness in addressing these issues. This will involve evaluating the extensions against a specific set of metrics that comprehensively represent the user concerns. Initially, we determine a set of metrics by referencing the related keywords in the topic framework, which encapsulate the various topics within each user concern. Subsequently, we review existing literature to ascertain which of these metrics have already been explored. This process helps us identify the metrics that remain unaddressed, forming the basis for our following analysis and measurement of these overlooked metrics.

In Figure 3, we highlight the different areas that concern people in different categories. For each user concern identified, we also indicate the proportion of critical reviews that are associated with that specific concern. The metrics that have been measured in the past are also highlighted with the literature references against them. The measurements for the newly identified metrics are covered later in Section 5. Metrics colored in Blue have been addressed by previous work. Orange-colored metrics are novel and haven’t been measured before. Yellow-colored metrics have been evaluated before but could benefit from enhanced measurement methods. We find eight novel metrics for measurement (RAM Usage, Disable Adblocker, Unable to load, Permissions, Privacy Policy and CSP, Ads, Filterlists, and Acceptable Ads), while we improve the measurement methods of two other metrics (Data Usage and CPU Usage).

Performance is mentioned in 6.01% of the critical reviews. Through the related keywords, we identified four crucial areas that users are concerned with: CPU Usage, Load Time, Data Usage, and RAM Usage. The most recent work in measuring performance was conducted by Borgolte et al. [40]. They used the perf tool to measure CPU usage, HAR files for Load time, and downloaded page size for Data usage on a pool of 2k live websites. Borgolte et al. [40] do not measure RAM usage or fine-grained CPU usage. Traverso et al. [81] conducted a comparison study of a few tracker-blockers over Load time, Data usage, and the number of third parties contacted over 100 websites. Garimella et al. [49] studied Data usage, third-party tracking, and Load Time of the top 150 websites. These papers aim to analyze a selection of privacy-preserving extensions using a predefined set of metrics, focusing on a limited number of websites.

[Figure 3: mind map titled "Benchmarking privacy preserving extensions". Concerns shown: Performance (6.01%), Web Compatibility (49.93%), Data and Privacy Policy (2.27%), Effectiveness (41.76%), Default Configuration (22.66%), each branching into its metrics with prior literature cited where available.]

Figure 3: A mind map illustrating the diverse User Concerns (UCs) and the corresponding metrics identified within each concern. The percentage of critical reviews discussing each concern is mentioned in brackets. For each metric, existing literature is cited where available. The metrics are visually differentiated using three colors: Blue indicates metrics that have been addressed in previous studies, Orange indicates metrics lacking prior research, and Yellow represents metrics that have been studied before but could benefit from enhanced measurement methods.

A significant gap in prior work is that it ignores the upward shift of website content that replaces blocked ad content to fill the visible display window, which leads to almost identical data usage in the extension and control cases. We improve this methodology by capturing every network packet fetched for the entire scrollable page length. This accounts for the upward shift of page content after ad-blocking as well as the lazy loading [27, 28] of ads, which is a common technique used for page speed enhancement. DebugBear [5] conducted a high-level study on various ad-blockers in 2021, utilizing their proprietary tools to analyze their performance on two specific websites: The Independent and the Pittsburgh Post-Gazette. They focused on metrics such as on-page CPU usage, the number of network requests, browser memory usage, and the download size of web pages. Although their analysis tools are not publicly available and their study was limited to just two websites, we observe a similar pattern between their findings on two websites and our results on 1500 Tranco websites.
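As a rough illustration of the data-usage comparison described above, the sketch below totals transferred bytes from two network captures, one without and one with an extension. It assumes the traffic recorded while scrolling the full page has been exported as HAR 1.2 logs, which is an assumption made here for illustration (the text only states that every fetched packet is captured); the function names are hypothetical.

```python
from typing import Any, Dict


def transferred_bytes(har: Dict[str, Any]) -> int:
    """Sum response header and body sizes over all entries of a HAR log."""
    total = 0
    for entry in har.get("log", {}).get("entries", []):
        response = entry.get("response", {})
        for field in ("headersSize", "bodySize"):
            size = response.get(field, -1)
            if size > 0:  # HAR records -1 when a size is unavailable
                total += size
    return total


def data_saving(control_har: Dict[str, Any], extension_har: Dict[str, Any]) -> float:
    """Relative reduction in bytes with the extension enabled (0.25 = 25% less)."""
    control = transferred_bytes(control_har)
    if control == 0:
        return 0.0
    return (control - transferred_bytes(extension_har)) / control
```

Because both captures cover the whole scrollable page, content that shifts upward after ads are removed is counted in both runs, so the difference reflects blocked requests rather than viewport effects.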

For web compatibility, we find that users most often write about cosmetic breakages in different HTML elements that can be visually observed. Web breakages are mentioned in 49.93% of all the critical reviews. Since these visual breakages are hard to measure due to the dynamic nature of the websites, we focus on two categories of breakages: “disable adblocker” prompt detection and “failed to load” websites. Our findings are in agreement with the work done by Nisenoff et al. [67], who built a website breakage taxonomy using web store reviews and GitHub issue reports, subsequently verifying it with user experiences. According to them, unresponsiveness and extension detection (the metrics we measure) constitute 43.5% of all breakages.

Different researchers have tried to measure breakages via various proxies without explicitly reproducing those breakages. Amjad et al. [37] manually annotated 383 websites for different kinds of breakages with NoScript and UbO. They used this dataset to validate their findings about the plausible impact of blocking functional JavaScript on web breakages. Smith et al. [76] developed a classifier based on breakages caused by individual filterlist rules to predict potential breakages. They used issue reports from Easylist to train their classifier. Le et al. [59] used the change in the number of images and text on the website after installing an extension to argue about the possibility of visible breakages. A common research gap in all these studies is the lack of large-scale measurement of reproducible web breakages. For detection, various researchers have used the presence of anti-adblocking scripts on websites to argue about the potential of websites to detect ad-blocking [53, 68, 88], but they do not measure the likelihood of a specific extension being detected.

Our paper is the first to study permissions, privacy policies, and the impact of default configurations on user experience in detail for privacy-preserving extensions. Data and Privacy policy is discussed in 2.27% of critical reviews. Carlini et al. [43] study the overall extension permission system in detail with a specific focus on security. Felt et al. [48] study the permission landscape of Android apps. Liu et al. [62] and Sanchez et al. [74] study the general browser extension landscape with the principle of least privilege (PoLP) in perspective and highlight vulnerabilities due to its violation. Although these papers do not specifically compare privacy-preserving extensions on permission abuse, they do highlight the criticality of the permissions in our evaluation set.

From User Insights to Actionable Metrics: A User-Focused Evaluation of Privacy-Preserving Browser Extensions ASIA CCS ’24, July 1–5, 2024, Singapore, Singapore

Around 22.66% of critical reviews express concerns about the default configurations in ad-blockers, a significant issue not extensively explored by previous researchers. Our analysis goes beyond comparing the filter lists utilized by different ad-blockers; we delve into the specifics of URL-based, HTML-based, and exception filter rules used by each. Additionally, we examine the rules of ABP’s Acceptable Ads list and contrast them with those of other extensions. This approach provides a detailed understanding of ad-blocker configurations and their implications.

For the effectiveness of these extensions, users are primarily focused on ads and third-party trackers being blocked. This concern appears in 41.76% of the critical reviews. Roesner et al. [73] devised techniques for detecting the tracking abilities of third-party websites using their behavior. Siby et al. [75] developed an ML classifier for identifying tracking behavior robust to tracking and detecting adversaries. Traverso et al. [81] and Garimella et al. [49] also measured the number of third parties contacted on a small set of websites by measuring the HTTP requests. We perform a high-level analysis to understand the blocking efficiency of privacy-preserving extensions rather than focusing on tracker behavior.

In the following section, we highlight the measurement methods

and the subsequent results.

5 MEASUREMENTS

We formulate our measurement strategy to measure the new metrics identified in Section 4. To gather data for comparison, we use Selenium [32] and Puppeteer [31] based crawlers to visit websites. It is important to note that all measurements are conducted using extension versions dated May 27, 2023.

Website testing pool. To capture the full extension activity, it is important to evaluate the extensions on both the landing pages and inner pages of websites. Inner pages often contain more content, scripts, and ads than the landing page, making them valuable for our experiments. While Hispar [38], a database of internal pages, could be useful, it does not specifically focus on inner landing pages with high content. To build a suitable website testing pool, we select 1500 websites from the Tranco list [60]. This pool contains the top 1000 websites from the Tranco list and then one website for every next 500 websites, allowing us to capture a broader spectrum of websites, as lower-ranked websites may exhibit different behaviors compared to higher-ranked ones. We refer to this website pool as the basic testing pool. We ensure that only one website (the most popular) belonging to a unique second-level domain is included, as the base policies generally do not change within the websites of the same organization. For example, we omit www.google.co.in since we already included www.google.com.
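The pool construction described above can be sketched as follows. This is only an illustration under stated assumptions: the function names, the tiny hard-coded suffix set, and the choice of the first eligible site within each block of 500 are hypothetical and are not taken from our crawler.

```python
from typing import Iterable, List

# Hypothetical, deliberately small set of two-label public suffixes; a real
# crawl would rely on the full Public Suffix List instead.
TWO_LABEL_SUFFIXES = {"co.in", "co.uk", "com.au", "co.jp", "com.br"}


def organization_label(domain: str) -> str:
    """Return the label directly left of the public suffix (e.g. 'google')."""
    labels = domain.lower().strip(".").split(".")
    suffix_len = 2 if ".".join(labels[-2:]) in TWO_LABEL_SUFFIXES else 1
    if len(labels) <= suffix_len:
        return labels[0]
    return labels[-suffix_len - 1]


def build_testing_pool(ranked: Iterable[str], top_n: int = 1000,
                       stride: int = 500, pool_size: int = 1500) -> List[str]:
    """Keep the top_n ranked sites, then one site per following block of `stride`."""
    pool, seen_labels, filled_blocks = [], set(), set()
    for rank, domain in enumerate(ranked):
        if len(pool) >= pool_size:
            break
        # Sites beyond the top_n belong to consecutive blocks of `stride` ranks.
        block = None if rank < top_n else (rank - top_n) // stride
        if block in filled_blocks:
            continue  # this block already contributed its one site
        label = organization_label(domain)
        if label in seen_labels:
            continue  # a more popular site of the same organization is present
        seen_labels.add(label)
        pool.append(domain)
        if block is not None:
            filled_blocks.add(block)
    return pool
```

Deduplicating on the organization label alone merges unrelated sites that share a name under different suffixes, so a production version would need a stricter notion of ownership.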

After filtering out unreachable websites, we extract the three longest same-origin href links from the websites that have more than 10 href links in total. This process leaves us with our inner page testing pool consisting of 476 websites without any inner pages and 834 websites with three inner pages. By choosing the longest href strings, we increase the likelihood of selecting actual inner pages rather than sections of the landing pages. We filter out websites with fewer than 10 href links on their landing page, as they tend to have only promotional or informational links and lack many inner pages. Although this methodology for finding the inner pages of a website is not ideal, it provides us with a fair number of inner pages. The threshold of 10 href links is qualitatively decided by observing a sudden drop in the number of websites with more than 10 href links, followed by a consistent pattern afterward.
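The inner-page selection can be approximated with the standard library alone. The sketch below assumes the landing page HTML has already been fetched; the class and function names are illustrative, and tie-breaking between equally long links is our own choice.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import urljoin, urlparse


class HrefCollector(HTMLParser):
    """Collect the href attribute of every <a> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def pick_inner_pages(landing_url: str, html: str,
                     min_links: int = 10, count: int = 3) -> List[str]:
    """Return the `count` longest same-origin links, or [] for link-poor pages."""
    collector = HrefCollector()
    collector.feed(html)
    if len(collector.hrefs) <= min_links:
        return []  # too few links: treated as a site without inner pages
    origin = urlparse(landing_url)
    candidates = set()
    for href in collector.hrefs:
        absolute = urljoin(landing_url, href)
        parsed = urlparse(absolute)
        if (parsed.scheme, parsed.netloc) == (origin.scheme, origin.netloc):
            candidates.add(absolute)
    candidates.discard(landing_url)
    # Longest strings first; ties are broken alphabetically for determinism.
    return sorted(candidates, key=lambda u: (-len(u), u))[:count]
```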

In the following subsections, we discuss the measurements conducted, the results obtained, and how our observations compare with the prior work. We use the Google Chrome browser for our experiments because of its popularity (market share of over 60% as of 2023). All measurements are done within Docker containers, on an AMD EPYC 7542 128-core machine from a vantage point in the USA. We perform all experiments on Chrome version 113 for reproducibility. All webpages are visited three times to account for DNS caching and the average result for the metric is reported.

5.1 UC1: Performance

Performance is a crucial metric when it comes to evaluating browser

extensions as it’s discussed in over 750 critical reviews. One of the

user reviews for example highlighted:

“Consumes way too much ram and processing power,

even while browsing websites that contain zero ads.”

To measure performance, we focus on the following metrics: CPU usage, Data usage, and RAM usage. Although the first two metrics have been studied before, we propose an improved approach to measure them and hence report our findings. Website Load time is a crucial metric; however, we do not assess it in our study as it has been effectively measured in previous research [40, 81].

For measuring CPU usage, the containers are configured to run on a single core, allowing us to accurately monitor CPU performance. We employ the ‘mpstat’ functionality instead of the perf tool utilized in the work by Borgolte et al. [40] to measure CPU usage. Instead of providing overall clock time, ‘mpstat’ provides us with the CPU occupancy in the user and kernel space for the duration of the process, averaged over the given tick size of one second. We collect these statistics for the duration of the website load time plus an additional two seconds to allow the CPU to cool down after browser closure. Since these extensions do not spend significant time in the kernel space, we report, for each extension, the difference between its average overall CPU usage and that of the control case (the no-extension case).
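The reported CPU metric reduces to a difference of two averages. A minimal sketch, assuming the per-second user and kernel percentages have already been parsed out of the ‘mpstat’ output (the parsing step and all names here are hypothetical):

```python
from statistics import mean
from typing import Sequence, Tuple

# One sample per one-second tick: (user-space %, kernel-space %).
Sample = Tuple[float, float]


def average_cpu(samples: Sequence[Sample]) -> float:
    """Average overall CPU occupancy (user + kernel) over all ticks."""
    return mean(user + kernel for user, kernel in samples)


def cpu_overhead(extension: Sequence[Sample], control: Sequence[Sample]) -> float:
    """Positive values mean the extension run used more CPU than the control."""
    return average_cpu(extension) - average_cpu(control)
```

For instance, `cpu_overhead([(30.0, 5.0), (20.0, 5.0)], [(15.0, 5.0), (15.0, 5.0)])` compares an average occupancy of 30% against 20%.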

To measure Data usage, we set up a browsermob proxy [17] to intercept all request and response packets while accessing the inner page website pool via Selenium. We calculate the average of all the ‘Content-Length’ header fields for every website in the inner page testing pool across all inner pages. We then report the difference between the data usage measured with the extension enabled and the data usage in the control case. To account for the upward shift of content and lazy loading of ads [27, 28], we visit a web page and scroll down to the end of the page at a constant speed to capture all possible network requests from the web page.
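As an illustration of the data usage computation, the sketch below sums the ‘Content-Length’ response headers in a HAR capture, which is the format a proxy such as browsermob can export. It assumes one capture per visited page, averages the per-page totals, and treats 1 MB as 10^6 bytes; these choices and the function names are assumptions made for the example.

```python
from statistics import mean
from typing import Any, Dict, Sequence


def page_bytes(har: Dict[str, Any]) -> int:
    """Sum the Content-Length response headers recorded in one HAR capture."""
    total = 0
    for entry in har["log"]["entries"]:
        for header in entry["response"]["headers"]:
            if header["name"].lower() == "content-length":
                try:
                    total += int(header["value"])
                except ValueError:
                    pass  # malformed header value: ignore it
    return total


def data_usage_difference_mb(extension_hars: Sequence[Dict[str, Any]],
                             control_hars: Sequence[Dict[str, Any]]) -> float:
    """Average per-page bytes with the extension minus the control, in MB."""
    extension_avg = mean(page_bytes(h) for h in extension_hars)
    control_avg = mean(page_bytes(h) for h in control_hars)
    return (extension_avg - control_avg) / 1_000_000
```

Responses sent with chunked transfer encoding carry no ‘Content-Length’ header, so a header-based sum of this kind undercounts them.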

For RAM usage measurement, the websites in the basic testing pool are run inside Docker containers with a restricted memory limit of 4GB. We extract the RAM usage data from the ‘docker stats’ functionality provided by the host machine every 1.5 seconds and report, for each extension, the difference between the average value observed during the load time and that of the control case.
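A sketch of how sampled memory readings could be turned into the reported difference is shown below. It assumes each sample is a memory-usage string of the form printed by ‘docker stats’ (for example "185.7MiB / 4GiB") and works in MiB; the sampling loop itself is omitted and the helper names are hypothetical.

```python
import re
from statistics import mean
from typing import Iterable

_UNITS = {"B": 1, "KiB": 1024, "MiB": 1024 ** 2, "GiB": 1024 ** 3}
_MEM = re.compile(r"^\s*([\d.]+)\s*(B|KiB|MiB|GiB)\s*/")


def used_mib(mem_usage: str) -> float:
    """Convert the 'used' half of a 'used / limit' string to MiB."""
    match = _MEM.match(mem_usage)
    if match is None:
        raise ValueError(f"unrecognized memory usage value: {mem_usage!r}")
    value, unit = match.groups()
    return float(value) * _UNITS[unit] / _UNITS["MiB"]


def ram_overhead_mib(extension_samples: Iterable[str],
                     control_samples: Iterable[str]) -> float:
    """Average memory with the extension minus the control average, in MiB."""
    return mean(map(used_mib, extension_samples)) - mean(map(used_mib, control_samples))
```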

ASIA CCS ’24, July 1–5, 2024, Singapore, Singapore Roongta et al.

Table 2: Data for multiple metrics for every extension. Mean and corresponding standard deviation across all websites is shown for each extension in the performance, frames, and third-party metric. For permissions: [symbol lost in extraction] denotes not requested, è denotes present but not justified, i.e., the permission should not be requested or an alternative less powerful permission should be used, and ○ represents that the permission is requested in a justified and reasonable way. For policy, [symbol lost in extraction] denotes that the policy is absent, [symbol lost in extraction] denotes the policy does not address all the GDPR concerns as covered in PrivacyCheck, and [symbol lost in extraction] represents either the policy complies with the GDPR concerns as covered in PrivacyCheck or claims to not collect any data.

Extension       CPU Usage (%)   Data Usage (MB)   RAM Usage (MB)   Break           Storage (MB)              Frames        3rd Party
                Mean ±SD        Mean ±SD          Mean ±SD         detect   hang   localStorage   IndexedDB  Mean ±SD      Mean ±SD
ABP             13.7 ±12.2      0.0 ±2.2          185.7 ±115.2     25       4      0.084          19.0       -4.8 ±15.2    -40.0 ±134.0
UbO             9.8 ±9.4        -1.1 ±13.8        24.9 ±101.6      15       1      13.0           0.0        -0.2 ±3.8     -76.6 ±179.9
PB              2.3 ±10.0       -0.0 ±2.4         53.3 ±103.6      18       3      1.092          0.0        -7.9 ±18.3    -67.0 ±170.8
Decentraleyes   3.8 ±9.6        0.3 ±2.3          38.5 ±80.4       14       2      0.124          0.0        -0.1 ±3.7     1.7 ±48.5
Disconnect      10.0 ±9.6       -0.1 ±2.5         132.4 ±107.3     19       2      0.150          0.0        -7.8 ±18.0    -67.2 ±171.1
Ghostery        0.4 ±11.5       -0.2 ±3.9         90.1 ±102.3      15       4      0.712          6.8        -0.4 ±5.0     -3.4 ±60.7
AdG             5.2 ±11.7       -0.9 ±15.3        119.9 ±104.3     20       8      4.412          0.0        -6.8 ±18.5    -55.9 ±163.9

Permissions and Policy columns (privacy, all-urls, unlimitedStorage, cookies, notifications, Privacy Policy, CSP); only the symbols below survive for each extension, without their column positions:
ABP: è è ○
UbO: ○ è ○
PB: ○
Decentraleyes: ○ è
Disconnect: (none)
Ghostery: ○
AdG: è è ○ ○

[Figure 4: boxplots in three panels, CPU Usage (avg, in percentage), Data Usage (in MB), and RAM Usage (avg, in MB), with one box per extension: ABP, UbO, PB, Decentraleyes, Disconnect, Ghostery, AdG.]

Figure 4: Different performance metrics against every extension. The reported values are differences between the value recorded with the extension and the control case over the inner website pool. The dotted line represents the median of the data. Negative values signify better performance compared to the control case whereas positive median values signify poorer performance compared to the control case.

Results. Figure 4 presents the performance metrics calculated for each extension using boxplots. The plotted values depict the difference between the metrics recorded when the corresponding extension is installed and the control case. Negative median values signify better performance compared to the control case whereas positive median values signify poorer performance. The outliers have not been plotted in this graph, but are depicted in Figure 6 (refer to the Appendix), showing the significantly high metric values for a few websites.

In terms of overall CPU usage, a majority of the extensions show positive median differences from the control case, indicating that they do not improve CPU performance. ABP also has a significant positive difference. PB and Ghostery perform slightly better than other extensions included in the analysis. The prior work [40] suggests that ABP performs less effectively in this metric compared to PB and Ghostery, which show similar performance levels to UbO with minimal performance enhancement. However, our granular-level findings suggest that Ghostery and PB slightly impact performance but still outperform other extensions. The variation observed in PB’s performance could be linked to its comprehensive pre-training process [1], which blocks trackers from installation. This aspect of PB has seen considerable improvement in recent years.

Data usage exhibits a median value of almost 0 for all extensions, implying no significant difference between data transferred in the extension case compared to the control case. This might be due to additional network requests made by the ad-blockers and also websites updating their content post-blocking. Refer to Figure 6 for the outliers. Most ad-blockers have a negative average value for the difference in data usage, with UbO showing the largest negative value. AdG has the highest inter-quartile spread amongst the ad-blockers, indicating that it affects data usage for a certain number of websites. ABP has a positive average value of 0.2, indicating that it transfers an additional 0.2MB of data on average.

RAM usage emerges as one of the most pressing concerns among users. UbO has the smallest positive median RAM usage difference among the ad-blockers, requiring an average of only 24.92 MB of additional RAM. In contrast, other ad-blockers consume significant amounts of RAM, with ABP being the worst performer, utilizing an additional average of 185.68 MB of RAM and a maximum RAM value of 315 MB.

5.2 UC2: Web compatibility

Web compatibility is a significant consideration when assessing the tradeoff between the security and usability of these extensions, as in this review:

“I second the request for a quick way to ’Pause’ or ’Enable/Disable’ µBlock. I find that there are quite a few sites that it breaks and it is easier to just turn it off temporarily and reload the site than to try to figure out what is breaking.”

To determine a website’s behavior of prompting users with a ‘disable extension’ dialog box, we run a Selenium crawler in virtual display mode on the inner page testing pool. This approach helps us to capture the different behaviors exhibited by websites towards extensions on their inner pages in addition to their landing pages. This discrepancy may be attributed to websites aiming to preserve ads on high-traffic and high-content pages by resisting ad filtering.

During the crawling process, we visit websites, including their inner pages, both with and without the extensions. We inspect the source code of every frame for the presence of ad-blocker detector keywords such as ‘adblock:detect’ or ‘disable ad blocker’. These keywords or their subtle variations often appear in alert boxes or iframes on the web page. Therefore, we visit each frame on the page and search for these specific keywords. We report the number of websites where such keywords are detected, indicating the websites that actively detect the presence of ad-blockers using basic JavaScript or prompt users to disable them. Additionally, we measure the number of websites that experience page hangs in the presence of extensions. A website is considered to hang if it exceeds a load time threshold of 60 seconds with the extensions enabled, while it loads within an acceptable timeframe in the control case without extensions. We selected this threshold because load times exceeding a minute are typically impractical in real-world scenarios where load times are measured in milliseconds.
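The two breakage checks can be expressed as simple predicates. The sketch below assumes the frame sources and load times have already been collected by the crawler; the keyword tuple contains only the two examples quoted above, and treating "an acceptable timeframe" as the same 60-second threshold is our assumption for the illustration.

```python
from typing import Iterable, Optional

# The two keywords quoted in the text; further variants would be additions.
DETECTOR_KEYWORDS = ("adblock:detect", "disable ad blocker")
HANG_THRESHOLD_S = 60.0


def detects_adblocker(frame_sources: Iterable[str]) -> bool:
    """True if any frame's source contains one of the detector keywords."""
    return any(keyword in source.lower()
               for source in frame_sources
               for keyword in DETECTOR_KEYWORDS)


def is_hang(extension_load_s: Optional[float],
            control_load_s: Optional[float]) -> bool:
    """True if the page is slow only with the extension; None means no load."""
    extension_slow = extension_load_s is None or extension_load_s > HANG_THRESHOLD_S
    control_ok = control_load_s is not None and control_load_s <= HANG_THRESHOLD_S
    return extension_slow and control_ok
```

Requiring the control load to succeed keeps websites that are slow or unreachable for unrelated reasons out of the hang count.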

Results. Table 2 provides an overview of the two categories of website breakages observed with the extensions. The category “detect” indicates the number of websites that either employ a basic adblock detector JavaScript or display a prompt asking users to disable their ad-blocker. The category “hang” represents the number of websites that take more than 60 seconds to load when the extensions are present. Based on our data, ABP and AdG are the most detectable extensions, with 25 and 20 websites detecting them respectively. It is worth noting that ABP triggers the ad-blocker disable prompt on a significant number of websites. This finding suggests that ABP is more detectable, and websites are more willing to ask users to disable it, despite its use of the Acceptable Ads exception list.

For websites taking more than 60 seconds to load in the presence of extensions, the data reveals that there are not many instances of such behavior. AdG is associated with the highest number of websites (8) experiencing hanging or delayed loading. This number increases substantially for lower thresholds. For example, 22 websites take more than 30 seconds with ABP installed while only 2 websites take that much time with UbO. It is important to consider that this behavior is not solely dependent on the presence of extensions. Many websites have mechanisms in place to detect automated crawling, resulting in differential behavior. Manual verification of these websites was performed to ensure consistency in the data. Although the actual Load time during manual crawls varied from the automated crawls, the relative pattern remained the same, i.e., websites taking longer times to load in automated crawls showed similar behavior with manual crawls.

5.3 UC3: Data and Privacy Policy

We identified many critical reviews about permissions, data storage, data prefetching, privacy & content security policies, and web anonymity. For example:

“In the latest update my chrome prompted me that the new permission "Full History Access" was added. Why?”

While these extensions are cautious in their permission requests, even slight oversights can violate the Principle of Least Privilege (PoLP). Considering the extensive range of permissions required by these extensions, such oversights can occur frequently. To gain a deeper understanding of these issues, we conduct a qualitative analysis of the extensions’ permission landscape.

Permissions. In our evaluation of the extensions, we focus on five specific permissions: privacy, unlimitedStorage, all-urls, notifications, and cookies. Our goal is to determine if the permissions requested by the extensions are necessary for the tasks they perform. Regarding data storage capabilities, we explore two commonly used techniques: localStorage and IndexedDB. Extensions commonly use both to store various data objects required for their functionality. Refer to their documentation [20, 26] for in-depth information about their functionality.

As per the Chrome documentation [20], the all-urls permission matches any URL that starts with a permitted scheme (http:, https:, file:, ws:, wss:, or ftp:). The privacy permission enables control over network prefetching, WebRTC blocking, etc., and is considered sensitive. It triggers a warning message that it “can change your privacy-related settings,” which has drawn attention from users and raised concerns about its usage. The cookies permission gives extensions access to the chrome.cookies API. The notifications permission enables extensions to create and show notifications.

Privacy policy and CSP. Some of the extensions in our study lack clearly defined privacy policies, even though they are available in the European region, which falls under the jurisdiction of the General Data Protection Regulation (GDPR) [33]. Given that these extensions process various aspects of PII associated with users, they must have well-defined privacy policies, at least in principle. To assess and compare the privacy policies of these extensions, we use the PrivacyCheck extension developed by Zaeem et al. [87]. It enables us to summarize and evaluate the privacy policies of the extensions under consideration. Additionally, we also examine the content security policies (CSP) implemented by these extensions via source code analysis.

Results. Permissions. The results of the source code examination of the extensions are shown in Table 2. The unlimitedStorage permission is requested by four extensions but is only used by UbO and AdG. Table 2 provides information on the local storage space utilized by each extension; only UbO exceeds the 5 MB limit. Some extensions argue that this permission is necessary because users can add large filterlists that may surpass the limit. However, extensions like Ghostery and PB allow filterlists without requiring this permission, demonstrating that alternatives exist. Moreover, the inability to request this permission optionally becomes an easy justification for the extension developers to request it, even though it serves a tiny population of users who might import large filterlists.

The all-urls permission is requested by ABP, UbO, and AdG. However, Chrome and Mozilla disabled FTP support in their respective browsers in 2021 [4]. Additionally, the limited number of "file:" based URLs are usually static, so there appears to be minimal scope for these extensions to block content on those web pages. Therefore, it is recommended to use strict URL matching for "http:" and "ws:" protocols only.

During our review study, many users expressed dissatisfaction with receiving annoying and intrusive notifications. Some notifications are a result of the extensions’ inability to block them, while others are self-generated by the extensions. ABP uses this permission to redirect users to their donation and review pages. Considering the critical user sentiment towards these notifications, extensions might consider providing this information in their descriptions instead. The cookies permission is requested by Ghostery and AdG. Ghostery requests this permission to block cookies and check for logged-in users. AdG uses it to remove cookies that match its filterlist rules. Overall, the usage of this permission by these extensions seems to be for benign purposes, aligning with their functionality and privacy objectives.

Among the four extensions that request the privacy permission (UbO, AdG, PB, and Decentraleyes), AdG is the only extension that lists it as an optional permission. The primary reason for requesting this permission is to disable network prefetching³, hyperlink auditing⁴, and block WebRTC requests⁵. Decentraleyes, PB, and UbO use this permission to disable prefetching. PB and UbO also use it to disable hyperlink auditing. Additionally, PB employs this permission to block alternate error pages, where setting the policy to True allows Google Chrome to use built-in alternate error pages (such as “page not found”). AdG uses this permission to block WebRTC functionality. However, UbO recently removed the WebRTC blocking option, as both Chrome and Firefox no longer leak private IP addresses [6]. Requesting this permission solely for WebRTC blocking, as done by AdG, may be unnecessary.

³ developer.mozilla.org/en-US/docs/Web/Performance/dns-prefetch
⁴ www.thewindowsclub.com/ping-hyperlink-auditing-in-chrome-firefox
⁵ developer.mozilla.org/en-US/docs/Web/API/WebRTC_API

Privacy Policy and CSP. Some extensions lack a privacy policy or claim no data collection, operating solely on the client side. We used PrivacyCheck for 10 GDPR checks on the remaining extensions’ policies. None inform users of data breaches. ABP and AdG restrict PII use from minors under 16. Most extensions, except Disconnect, comply with other GDPR sections covered by PrivacyCheck. Regarding the handling of PII categories, ABP complies with COPPA, allowing users to opt out in case their privacy policy changes. Disconnect lacks user data control, such as editing collected information. While all extensions collect PII like email addresses, none gather sensitive data like SSNs or credit card details. All extensions may disclose information to government authorities as legally required, such as subpoenas.

In terms of Content Security Policy (CSP) implementation, UbO, Ghostery, and AdG use CSP directives in their manifest files. These extensions configure the ‘script-src’ and ‘object-src’ fields to ‘self’, allowing local plugin content and script origins. Ghostery implements ‘wasm-eval’, which may be considered unsafe and is not included in MDN’s CSP directives [19].

5.4 UC4: Extension Effectiveness

This user concern raises critical questions about the improvements offered by the extensions in web browsing. It encompasses two main categories: ads and tracking.

“double click advertising is tracking me even blocked

what can i do?? Help block them! I also get a lot of

Google analytics and google platforms should i trust

them or what??”

We address each category individually, assuming that the websites are rendered without breakage and that increased filtering indicates better performance. These measurements are high-level proxies that give us insights into how many ads and third parties might have been blocked by these extensions, without measuring advanced adversaries that employ extension evasion strategies. For calculating both ads and third parties, we visit each website in the inner page testing pool three times and report the average difference between the extension and the control case. This approach accounts for the dynamic nature of the websites.
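The aggregation behind the Mean (SD) columns can be sketched as below: each website's three visits are averaged first, the control average is subtracted, and the mean and standard deviation are then taken across websites. The use of the sample standard deviation and the dictionary layout are assumptions made for the illustration.

```python
from statistics import mean, stdev
from typing import Dict, Sequence, Tuple


def summarize(extension: Dict[str, Sequence[float]],
              control: Dict[str, Sequence[float]]) -> Tuple[float, float]:
    """Return (mean, SD) of per-website differences, extension minus control.

    Each dictionary maps a website to the values recorded over its visits.
    At least two websites must be present in both dictionaries.
    """
    differences = [mean(extension[site]) - mean(control[site])
                   for site in extension if site in control]
    return mean(differences), stdev(differences)
```

A negative mean therefore indicates that, on average, fewer frames or third parties were observed with the extension than without it.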

Ads. To evaluate the effectiveness of ad-blocking, we measure the reduction in the number of frames on web pages. Advertisements are often displayed within HTML frames, which display content independent of their container. Many HTML tags that contain ad scripts are rendered as frames and iframes. Effective ad-blockers remove the frame objects from the page layout as part of the filtering process. We use Puppeteer to hook into the web page and calculate the number of frames using the page.metrics() function.

Third parties. A 3rd-party website domain refers to the domain or web address of a website that is operated and owned by a separate entity or organization distinct from the owner/operator of the primary website. Most trackers can be classified as a 3rd party domain. OpenWPM [47] provides us with a mechanism to calculate the number of 3rd parties contacted during a website crawl.
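To make the third-party definition concrete, the sketch below counts distinct third-party domains among the request URLs of one page visit. It is not the OpenWPM interface: the function names are hypothetical, and the registrable domain is approximated naively by the last two hostname labels, which misclassifies suffixes such as co.uk.

```python
from typing import Iterable, Set
from urllib.parse import urlparse


def site_of(url: str) -> str:
    """Naive registrable domain: the last two labels of the hostname."""
    host = (urlparse(url).hostname or "").lower()
    return ".".join(host.split(".")[-2:])


def third_parties(page_url: str, request_urls: Iterable[str]) -> Set[str]:
    """Distinct domains contacted that differ from the visited page's domain."""
    first_party = site_of(page_url)
    return {site_of(url) for url in request_urls} - {first_party, ""}
```

Subtracting the size of this set in the control case from its size with an extension installed yields the per-website difference that is then averaged.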

Results. Table 2 shows the average and standard deviation values obtained from our data collection for each crawl. The mean value represents the average reduction in the recorded metric. A more negative mean value suggests that the extension is more effective at blocking ads and third-party content.

Ads. UbO and Ghostery perform the least effectively, blocking only 0.2 and 0.4 frames on average. PB performs well by blocking an average of 7.9 frames.

Third parties. UbO performs best, blocking an average of 77 third parties, while Ghostery has the lowest effectiveness, blocking only 3.4 third parties. Decentraleyes actually increases the number of third parties contacted on average.

5.5 UC5: Default Configurations

Default configurations are significant user concerns that encompass categories such as manual settings, filters, and configurations. For example:

“Great app, but it lacks default blocking options. There

are services I want to block everywhere and there are

services I don’t want to block at all (e.g. facebook).”

Many users found the necessity to manually configure allowlists or adjust extension settings to be bothersome. This aligns with the common understanding that users typically prefer to use tools with their default configurations [21, 29]. Quantifying this through automated testing is challenging. We examine the default filter lists used by ad-blockers and measure the different URLs and HTML elements blocked by these lists along with the exception rules.
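Counting the three rule types can be sketched for lists written in Adblock Plus filter syntax, where comments start with "!", exception rules start with "@@", and element-hiding rules contain "##". How the less common markers ("#@#", "#?#") are binned is our assumption here, as are the function names.

```python
from collections import Counter
from typing import Iterable

# Element-hiding marker and two of its variants, all binned as HTML-based.
COSMETIC_MARKERS = ("##", "#@#", "#?#")


def classify_rule(line: str) -> str:
    """Classify one filterlist line as 'url', 'html', 'exception', or 'ignored'."""
    rule = line.strip()
    if not rule or rule.startswith("!") or rule.startswith("["):
        return "ignored"      # blank line, comment, or list header
    if rule.startswith("@@"):
        return "exception"    # network exception (allow) rule
    if any(marker in rule for marker in COSMETIC_MARKERS):
        return "html"         # element-hiding rule and its variants
    return "url"              # everything else is treated as a network rule


def count_rules(lines: Iterable[str]) -> Counter:
    """Tally URL-based, HTML-based, and exception rules in a filterlist."""
    counts = Counter(classify_rule(line) for line in lines)
    counts.pop("ignored", None)
    return counts
```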

We identify the filterlists imported by the extensions and calculate the number of third-party domains allowed by the Acceptable Ads exception list of ABP that are blocked by other extensions. The presence of Acceptable Ads is a significant concern for users, as they may encounter ads on websites despite having ad-blocking extensions installed. This discrepancy in ad-blocking effectiveness can lead to user dissatisfaction, as is evident in one of the ABP’s user reviews (e.g., Review: They used to block adds but now their "acceptable adds" are just as bad as what they used to block.). We focus specifically on third-party domains allowed by the Acceptable Ads list, excluding any HTML tags or cookies.

Table 3: Number of URL-based, HTML-based, and exception filter rules used by the extensions in their default state. PB and Disconnect have neither filter rules for HTML-based elements nor exception rules.

Extension     URL-based filter rules   HTML-based filter rules   Exception rules
ABP           75992                    293403                    13117
UbO           43202                    22808                     3088
PB            2171                     -                         -
Disconnect    2506                     -                         -
Ghostery      75317                    38249                     5328
AdG           263912                   117607                    13364

Results. Filterlists. ABP, UbO, and Ghostery use popular filterlists such as Easylist and Easyprivacy. Ghostery and UbO also import Peter Lowe’s filterlist and Fanboy, and UbO has its additional lists as well. AdG uses its own set of desktop and mobile filterlists. PB begins with a seed file to calibrate its third-party tracking algorithm. Disconnect’s filterlist was last updated in 2019 before the transition to a paid service. Decentraleyes maintains a local copy of popular JS libraries to prevent tracking by popular CDN servers. Table 3 shows the number of URL-based filter rules, HTML element-based filter rules, and exception rules for each extension in their default mode. UbO and Ghostery have a similar number of filter rules while ABP has significantly fewer filter rules among the filterlist-based extensions. Also, ABP has a significantly high number of exception rules due to the Acceptable Ads list. AdG has the highest number of filters as well as exception rules because it uses its own large set of filterlists for blocking.

Acceptable Ads. Many users express concerns about the Acceptable Ads campaign and the corresponding allowlist used by ABP. While other extensions also allow certain URLs for compatibility purposes, the Acceptable Ads allowlist aims to support advertisers who adhere to the Acceptable Ads Standard policies [18]. This list contains a total of 10,934 exception URLs. To assess how other extensions treat these URLs, we focus on AdG, UbO, and Ghostery, since they use different filterlists to block ads. Of the URLs in the Acceptable Ads allowlist, UbO, AdG, and Ghostery block 277, 442, and 293 URLs and allow 143, 258, and 150 URLs, respectively. The remaining URLs from the Acceptable Ads allowlist appear in neither the blocked nor the allowed sections of the respective filterlists.
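The comparison described above can be sketched as a three-way tally: each allowlist entry is either blocked by another extension's filterlist, explicitly allowed by it, or not mentioned. The sketch below assumes domain-anchored rules of the form `||domain^` and exact domain matching, and it counts a domain that appears in both sets as blocked. All names and sample rules are hypothetical, and the matching used in the actual measurement may differ.

```python
import re
from typing import Dict, Iterable, Optional, Tuple

# Matches the domain of anchored rules such as "||example.com^" or
# "@@||example.com^$script"; other rule shapes are ignored in this sketch.
_DOMAIN_RULE = re.compile(r"^(@@)?\|\|([a-z0-9.-]+)")


def rule_domain(rule: str) -> Optional[Tuple[bool, str]]:
    """Return (is_exception, domain) for a domain-anchored rule, else None."""
    match = _DOMAIN_RULE.match(rule.strip().lower())
    if match is None:
        return None
    return (match.group(1) is not None, match.group(2))


def compare_allowlist(allowlist: Iterable[str], other_list: Iterable[str]) -> Dict[str, int]:
    """Tally how the domains in `allowlist` are treated by `other_list`."""
    blocked, allowed = set(), set()
    for rule in other_list:
        parsed = rule_domain(rule)
        if parsed is None:
            continue
        is_exception, domain = parsed
        (allowed if is_exception else blocked).add(domain)

    tally = {"blocked": 0, "allowed": 0, "not_mentioned": 0}
    for rule in allowlist:
        parsed = rule_domain(rule)
        if parsed is None:
            continue
        _, domain = parsed
        # Assumption: a domain present in both sets is counted as blocked.
        if domain in blocked:
            tally["blocked"] += 1
        elif domain in allowed:
            tally["allowed"] += 1
        else:
            tally["not_mentioned"] += 1
    return tally


# Hypothetical rules, used only to exercise the comparison.
acceptable_ads = [
    "@@||ads.example.com^$document",
    "@@||partner.example.org^",
    "@@||static.example.net^",
]
other_filterlist = [
    "||ads.example.com^",
    "@@||partner.example.org^$script",
    "||tracker.example.io^",
    "example.com##.ad",
]

result = compare_allowlist(acceptable_ads, other_filterlist)
print(result)
```

Exact domain matching keeps the sketch short; rules with paths, wildcards, or option modifiers would require a full filter-matching engine.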

6 SUMMARY

In our work, we first pinpoint user concerns regarding privacy-preserving extensions by analyzing reviews from the Chrome Web Store. This analysis leads to a framework of usability and privacy topics that reveals five key user concerns. From this framework, we identify 14 critical metrics for evaluating these extensions. We find that existing literature does not effectively address 10 of these 14 metrics. To bridge this research gap, we design measurement methods and apply them to evaluate the performance of various extensions on these metrics.

In Table 4, we summarize our findings for each metric analyzed. We focus on the major metrics evaluated in this paper and list the ideal and subpar extensions for each. As the table shows, it is challenging for any extension in the existing pool to address all user concerns, but the summary can help users evaluate the extensions based on their priorities. Table 4 underscores several novel metrics, such as data usage, RAM usage, permissions, reproducible detection, and unresponsiveness, which are essential for assessing privacy-preserving extensions. This evaluation, encompassing a broad range of user concerns, enables individuals to make informed decisions about which extension or combination of extensions to install, based on their specific priorities and areas of interest.

7 DISCUSSION

Recommendations. We reached out to the developers of all the extensions regarding the instances of permission abuse. The developers of a few extensions gave unsatisfactory responses, citing legacy support as the reason for continuing to use such permissions. We have the following recommendations for the developers:


Table 4: Summary of extensions’ performance on different metrics. Ideal represents the best-performing set of extensions, and Subpar represents poorly performing extensions. Abbreviations: D’nect = Disconnect, Gh’ry = Ghostery.

Metrics Ideal Subpar

CPU usage Gh’ry ABP

Data Usage UbO ABP

RAM usage UbO ABP

ad-blocker

detection prompt

UbO

Gh’ry

ABP

Permissions Gh’ry AdG

Privacy

Policy

UbO

ABP

Gh’ry

Ads PB

D’nect

UbO

3rd-party UbO

PB

D’nect

ABP

Default filterlists

AdG ABP

• Maintain a version database to support legacy browsers while shipping the most secure version in the web store.
• Develop a sound issue-reporting forum for users to report issues with extension usage.
• Work with browser vendors to design permission APIs adhering to security and privacy principles.

In our research, we identify certain limitations and complex issues, which merit further attention from fellow researchers in the field of security and privacy. We highlight them here.

Reviews. We face two significant challenges with the review dataset. First, we need a method to differentiate fake reviews from genuine ones [34] and to limit the impact of review mills [35]. This issue is challenging to address because there are no accurate verification methods other than Google’s proprietary machine learning techniques, while other tools like Fakespot [36] are limited to Amazon, Walmart, and eBay reviews. Second, the reviews span a period of 10 years, so some of the concerns they raise may no longer be relevant. Also, the uneven and sporadic distribution of reviews across different periods makes it hard to track shifts in topics over time. To address this, we conduct a measurement study to determine whether the concerns identified are still relevant and have a noticeable impact. Also, by considering only critical reviews, we ensure that short reviews (fewer than 5 words), which do not mention any aspect of the extensions and simply serve to increase overall star ratings, are filtered out. For more details on the temporal shift of critical sentiments, refer to Appendix A.

Breakages. Measuring website breakages deterministically is a hard problem. Breakages depend on several factors operating in tandem, and isolating them is difficult. While Nisenoff et al. [67] offer a foundational taxonomy of breakages, there remains a gap in mapping them to their respective causes. Existing GitHub issue reports of website breakages are difficult to rely upon, as popular sites are dynamic and get patched quickly. Our work tries to address a few of these challenges by building a taxonomy of breakage types around privacy-preserving extensions and testing a few of them, such as detection and unresponsiveness, which can be measured deterministically. Because websites are dynamic, other breakages are hard to measure: they get patched quickly and might not appear reliably across different testing environments. Our future work focuses on detecting visible and non-visible breakages on websites using AI-assisted crawling and manual analysis.

Fingerprint. Fingerprinting of users in the presence of extensions is a complex topic. Although the change in the user fingerprint due to a single attribute is often studied, analyzing the effect on a user’s entire fingerprinting landscape is difficult, since it depends on multiple factors such as the strength of the adversary and the interdependence of different attributes. EFF’s tool [46] considers different attributes but does not incorporate their interdependence. Future work should study the collective impact of each web attribute on the user’s entire web footprint.

Benchmarking framework. Each user concern in our analysis is distinct and has the potential to be studied as an independent research topic. The benchmarking process does not have a standardized set of measurement methods for every concern. This is evident in our work, where the depth of evaluation varies across user concerns. For example, we conduct a detailed evaluation of the ‘performance’ user concern because user reviews mention various performance-related issues precisely. Conversely, the ‘break’ concern is evaluated superficially due to the vagueness of user reviews and the complexity of evaluation methodologies.

8 CONCLUSION

Our study emphasizes the importance of a detailed assessment of user concerns to enhance the efficacy of privacy-preserving browser extensions. We identify 14 key metrics that impact user experience with these extensions. Our extensive literature review reveals that 10 of these metrics lack proper measurement methods or could benefit from enhanced measurement techniques. We develop new methods and improve existing ones to evaluate the performance of extensions across these 10 metrics. Our findings are summarized in Table 4, where we distinguish between ideal and subpar extensions for each metric. Additionally, we point out intricate aspects of extension benchmarking, such as website breakages, that need further exploration. This research lays the groundwork for future studies on enhancing ad-blocking and privacy protection extensions.

The centralized code repository for our project can be found here⁶. This repository includes the code for the measurements. We also release the fine-tuned BERT model⁷