Legal Notice

©

Cloudera Inc. 2024. All rights reserved.

The documentation is and contains Cloudera proprietary information protected by copyright and other intellectual property

rights. No license under copyright or any other intellectual property right is granted herein.

Unless otherwise noted, scripts and sample code are licensed under the Apache License, Version 2.0.

Copyright information for Cloudera software may be found within the documentation accompanying each component in a

particular release.

Cloudera software includes software from various open source or other third party projects, and may be released under the

Apache Software License 2.0 (“ASLv2”), the Affero General Public License version 3 (AGPLv3), or other license terms.

Other software included may be released under the terms of alternative open source licenses. Please review the license and

notice files accompanying the software for additional licensing information.

Please visit the Cloudera software product page for more information on Cloudera software. For more information on

Cloudera support services, please visit either the Support or Sales page. Feel free to contact us directly to discuss your

specific needs.

Cloudera reserves the right to change any products at any time, and without notice. Cloudera assumes no responsibility nor

liability arising from the use of products, except as expressly agreed to in writing by Cloudera.

Cloudera, Cloudera Altus, HUE, Impala, Cloudera Impala, and other Cloudera marks are registered or unregistered

trademarks in the United States and other countries. All other trademarks are the property of their respective owners.

Disclaimer: EXCEPT AS EXPRESSLY PROVIDED IN A WRITTEN AGREEMENT WITH CLOUDERA,

CLOUDERA DOES NOT MAKE NOR GIVE ANY REPRESENTATION, WARRANTY, NOR COVENANT OF

ANY KIND, WHETHER EXPRESS OR IMPLIED, IN CONNECTION WITH CLOUDERA TECHNOLOGY OR

RELATED SUPPORT PROVIDED IN CONNECTION THEREWITH. CLOUDERA DOES NOT WARRANT THAT

CLOUDERA PRODUCTS NOR SOFTWARE WILL OPERATE UNINTERRUPTED NOR THAT IT WILL BE

FREE FROM DEFECTS NOR ERRORS, THAT IT WILL PROTECT YOUR DATA FROM LOSS, CORRUPTION

NOR UNAVAILABILITY, NOR THAT IT WILL MEET ALL OF CUSTOMER’S BUSINESS REQUIREMENTS.

WITHOUT LIMITING THE FOREGOING, AND TO THE MAXIMUM EXTENT PERMITTED BY APPLICABLE

LAW, CLOUDERA EXPRESSLY DISCLAIMS ANY AND ALL IMPLIED WARRANTIES, INCLUDING, BUT NOT

LIMITED TO IMPLIED WARRANTIES OF MERCHANTABILITY, QUALITY, NON-INFRINGEMENT, TITLE, AND

FITNESS FOR A PARTICULAR PURPOSE AND ANY REPRESENTATION, WARRANTY, OR COVENANT BASED

ON COURSE OF DEALING OR USAGE IN TRADE.

Cloudera Runtime | Contents | iii

Contents

Upgrading Ozone overview..................................................................................... 6

Backing up Ozone................................................................................................................................................ 6

Upgrading Ozone parcels..................................................................................................................................... 6

Ozone S3 Multitenancy overview (Technical Preview).........................................7

Prerequisites to enable S3 Multitenancy..............................................................................................................7

Enabling S3 Multi-Tenancy................................................................................................................................. 7

Tenant Commands................................................................................................................................................8

Multi Protocol Aware System overview.................................................................9

Upgrading this feature from older Ozone version to 7.1.8..................................................................................9

Files and Objects together..................................................................................................................................10

Bucket Layout.....................................................................................................................................................10

Ozone FS namespace optimization with prefix..................................................................................... 12

OBS as Pure Object Store......................................................................................................................15

Configuration to create bucket with default layout........................................................................................... 15

Performing Bucket Layout operations in Apache Ozone using CLI.................................................................16

FSO operations....................................................................................................................................... 16

Object Store operations using AWS client............................................................................................ 17

Object Store operations using Ozone shell command........................................................................... 18

Ozone Ranger policy..........................................................................................................................................18

Ozone Ranger Integration..................................................................................... 18

Configuring a resource-based policy using Ranger...........................................................................................19

Erasure Coding overview.......................................................................................21

Enabling EC replication configuration cluster-wide..........................................................................................21

Enabling EC replication configuration on bucket..............................................................................................22

Enabling EC replication configuration on keys or files.................................................................................... 22

Container Balancer overview................................................................................ 23

Container balancer CLI commands....................................................................................................................23

Configuring container balancer service..............................................................................................................23

Activating container balancer using Cloudera Manager....................................................................................24

Managing storage elements by using the command-line interface.................... 25

Commands for managing volumes.....................................................................................................................25

Assigning administrator privileges to users...........................................................................................27

Commands for managing buckets......................................................................................................................28

Commands for managing keys...........................................................................................................................29

Using Ozone S3 Gateway to work with storage elements...................................31

Cloudera Runtime | Contents | iv

Configuration to expose buckets under non-default volumes............................................................................31

REST endpoints supported on Ozone S3 Gateway........................................................................................... 31

Configuring Ozone to work as a pure object store............................................................................................32

Access Ozone S3 Gateway using the S3A filesystem.......................................................................................32

Accessing Ozone S3 using S3A FileSystem..........................................................................................33

Examples of using the S3A filesystem with Ozone S3 Gateway..........................................................36

Configuring Spark access for S3A.........................................................................................................36

Configuring Hive access for S3A.......................................................................................................... 38

Configuring Impala access for S3A.......................................................................................................39

Using the AWS CLI with Ozone S3 Gateway.................................................................................................. 40

Configuring an https endpoint in Ozone S3 Gateway to work with AWS CLI.....................................40

Examples of using the AWS CLI for Ozone S3 Gateway.................................................................... 41

Accessing Ozone object store with Amazon Boto3 client................................... 43

Obtaining resources to Ozone............................................................................................................................43

Obtaining client to Ozone through session........................................................................................................43

List of APIs verified...........................................................................................................................................44

Create a bucket....................................................................................................................................... 44

List buckets.............................................................................................................................................44

Head a bucket......................................................................................................................................... 44

Delete a bucket....................................................................................................................................... 44

Upload a file...........................................................................................................................................45

Download a file...................................................................................................................................... 45

Head an object........................................................................................................................................45

Delete Objects.........................................................................................................................................45

Multipart upload......................................................................................................................................45

Working with Ozone File System (ofs).................................................................46

Setting up ofs......................................................................................................................................................46

Volume and bucket management using ofs.......................................................................................................47

Key management using ofs................................................................................................................................48

Working with Ozone File System (o3fs)...............................................................49

Setting up o3fs....................................................................................................................................................49

Ozone configuration options to work with CDP components............................ 50

Configuration options for Spark to work with Ozone File System (ofs)...........................................................50

Configuration options to store Hive managed tables on Ozone........................................................................ 50

Overview of the Ozone Manager in High Availability....................................... 51

Considerations for configuring High Availability on the Ozone Manager........................................................51

Ozone Manager nodes in High Availability...................................................................................................... 51

Read and write requests with Ozone Manager in High Availability.....................................................51

Overview of Storage Container Manager in High Availability..........................52

Considerations for configuring High Availability on Storage Container Manager........................................... 52

Storage Container Manager operations in High Availability............................................................................ 53

Offloading Application Logs to Ozone.................................................................53

Cloudera Runtime | Contents | v

Removing Ozone DataNodes from the cluster.....................................................54

Decommissioning Ozone DataNodes.................................................................................................................54

Placing Ozone DataNodes in offline mode........................................................................................................55

Configuring the number of storage container copies for a DataNode...............................................................55

Recommissioning an Ozone DataNode..............................................................................................................56

Handling datanode disk failure.......................................................................................................................... 56

Multi-Raft configuration for efficient write performances................................ 56

Working with the Recon web user interface....................................................... 57

Access the Recon web user interface................................................................................................................ 57

Elements of the Recon web user interface........................................................................................................ 58

Overview page........................................................................................................................................58

DataNodes page......................................................................................................................................58

Pipelines page......................................................................................................................................... 59

Missing Containers page........................................................................................................................ 60

Configuring Ozone to work with Prometheus.....................................................61

Ozone trash overview.............................................................................................62

Configuring the Ozone trash checkpoint values..................................................62

Cloudera Runtime Upgrading Ozone overview

Upgrading Ozone overview

You must understand the overview of the upgrade feature. By upgrading the CDP Runtime parcels using Cloudera

Manager, Ozone is also upgraded. This Ozone upgrade provides you with new features that are made available with

the 7.1.8 release.

This feature helps you upgrade or downgrade Ozone. Ozone upgrade from Cloudera Manager is managed by

upgrading the CDP parcels. Before upgrading the CDP parcels, as a pre-upgrade step, you must take a backup of OM

metadata and SCM metadata. Ozone will be brought to a read-only state before the upgrade and this helps the OMs to

synchronize before the upgrade. The upgrade is completely managed by Cloudera Manager.

When the new version of Ozone starts, new features are not yet available. This allows you to downgrade to an older

version. In case you wish to downgrade, then the older version of Ozone is restored. However, data written in the

newer version is still readable by the older version of Ozone.

If you wish to finalize the upgrade and enable the new features, you must run the Finalize Upgrade command. This

updates the metadata layout of Ozone services, persists the changes required for the new version, and enables the new

features.

Note: After running the Finalize Upgrade command, it is not possible to downgrade.

Backing up Ozone

You must shutdown the cluster and take the backup of OM and SCM metadata.

About this task

Note: To locate the hostnames required to backup OM and SCM, open the Cloudera Manager Admin

Console, go to the Ozone service, and click the Instances tab.

Procedure

1.

On each OM, copy the directories indicated by the ozone.om.db.dirs and ozone.om.ratis.storage.dir config keys to

the backup location by running the command cp -r <config_directory> <backup_directory>.

2.

On each SCM, copy the directories indicated by the ozone.scm.db.dirs and ozone.scm.ha.ratis.storage.dir

config keys to the backup location by running the command cp -r <config_directory>

<backup_directory>.

Note: You must take a backup of ozone.scm.ha.ratis.storage.dir only if ozone.scm.ratis.enable is set to

true.

Upgrading Ozone parcels

You must upgrade the CDP Runtime parcels which in turn updates Ozone. To complete the upgrade of Ozone

services, you must finalize the upgrade. This allows you to access the latest features available for this release.

Procedure

1.

Using Cloudera Manager, upgrade the CDP parcels. This upgrades Ozone as well.

2.

Click the Finish Upgrade option. In this state you can read and write to ensure that the Ozone cluster is working as

expected. At this stage, you can decide to finalize the upgrade or downgrade to the previous version.

6

Cloudera Runtime Ozone S3 Multitenancy overview (Technical Preview)

3.

Click the Finalize Upgrade option. At this stage, the cluster will be in the read-only state. After sufficient

DataNodes finalize to serve writes, the cluster will leave the read-only state.

Note: After running the Finalize Upgrade command, it is not possible to downgrade.

Ozone S3 Multitenancy overview (Technical Preview)

Apache Ozone now supports the multi-tenancy feature. This feature enables Ozone to compartmentalize the resources

and create multiple tenants.

Technical Preview: This is a technical preview feature and considered under development. Do not use this in your

production systems. To share your feedback, contact Support by logging a case on our Cloudera Support Portal.

Technical preview features are not guaranteed troubleshooting guidance and fixes.

You can access multiple S3-accessible Ozone volumes available over AWS S3 using CLI or APIs. You can control

each of these volumes with Ozone administrator privileges or tenant administrator privileges. You can use Apache

Ranger to control the volume, bucket and key access.

Each tenant by default has a volume assigned. An administrator can provide the volume access to a user. An Access

ID & Secret Key pair is generated for every user to access the volume. An Ozone administrator can then assign one

or more tenant users with the tenant administrator privilege in a tenant, so these tenant administrators can assign and

revoke users from the tenant without involving the Ozone administrators.

Prerequisites to enable S3 Multitenancy

Before you proceed to enabling the feature, you must understand the prerequisites that is required mandatorily.

• To have a secure cluster, you must enable Kerberos Authentication. For more information, see Securing the

cluster using Kerberos.

• You must perform a one-time configuration change in Ranger UserSync to add an om user or short username that

Ozone Manager is using for Kerberos authentication to the ranger.usersync.whitelist.users.role.assignment.rules

configuration.

Note: This would no longer be necessary once Ranger allows service admin users to create, update, and

delete Ranger roles.

• You must have a minimum of one S3 Gateway setup in order to access the tenant buckets with S3 API. For more

information, see Using Ozone S3 gateway.

• You can create additional Ozone policies using Ranger, For more information, see Configure a resource-based

policy.

Enabling S3 Multi-Tenancy

You must perform the following steps to enable the S3 multi-tenancy feature.

Procedure

1.

Log in to Cloudera Manager UI

2.

Navigate to Clusters

3.

Select the Ozone service

4.

Go to Configurations

5.

Search for Enable Ozone S3 Multi-Tenancy and select the checkbox

6.

Click Save Changes

7

Cloudera Runtime Ozone S3 Multitenancy overview (Technical Preview)

7.

Restart the Ozone service

Tenant Commands

After setting up the S3 Multi-Tenancy feature, you can create and list tenants, assign users to tenants and also assign

admin privileges, revoke admin access or even delete a tenant and so on.

The following commands assume that the cluster is Kerberized and Ranger enabled.

Note: If you have enabled Ozone Manager HA on the Ozone service, then you must append --om-

service-id= to the commands.

Creating a tenant

To create a new tenant in the Ozone cluster, you must have cluster admin privileges defined in ozone.administrators

configuration. When you create a tenant, a volume with the same name will be created. However, tenant name and

volume name must be the same and tenant volume cannot be changed after the tenant is created.

To create a new tenant, execute the following command: ozone tenant [--verbose] create <TENANT_NAME>

Listing a tenant

To list all tenants in an Ozone cluster, execute the following command: ozone tenant list [--json]

Assiging a user to a tenant

Only an Ozone cluster administrator can assign the first user to a tenant. After the first user gets admin privileges, the

first user can create and assign new users. A user can be assigned multiple tenants.

To assign a user to a tenant, execute the following command: ozone tenant [--verbose] user assign <USER_NAME>

--tenant=<TENANT_NAME>

Assigning a user as a tenant admin

Only an Ozone cluster administrator can assign the first user to a tenant along with access key ID/secret pair. After

the first user gets admin privileges, the first user can create and assign new users. A user can be assigned multiple

tenants.

Both delegated and non-delegated tenant admins can assign and revoke tenant users from their tenant. However, only

a delegated admin can assign and revoke the tenant admins from a tenant.

You can be a tenant admin in multiple tenants. However, you will be assigned different access IDs under each tenant.

To assign a user as a tenant admin (the current logged-in user must have Ozone cluster administrator or tenant

delegated administrator privilege), execute the following command:

ozone tenant user assignadmin <ACCESS_ID> [-d|--delegated]

--tenant=<TENANT_NAME>

Listing users in a tenant

To list users in a tenant, execute the following command: ozone tenant user list [--json] <TENANT_NAME>

Getting tenant user info

To get tenant user’s information, execute the following command: ozone tenant user info [--json] <USER_NAME>

8

Cloudera Runtime Multi Protocol Aware System overview

Revoking a tenant admin

To revoke a tenant admin, execute the following command: ozone tenant [--verbose] user revokeadmin <ACCESS_

ID>

Revoking user access from a tenant

To revoke the user access from a tenant, execute the following command:

ozone tenant [--verbose] user revoke <ACCESS_ID>

Deleting a tenant

The tenant must be empty and all admin user access revoked before deleting a tenant. This is a safety design to ensure

that even if a tenant is deleted, the volume created for the tenant is intact.

To delete a tenant, execute the following command: ozone tenant [--verbose] delete <TENANT_NAME>

Note: After a successful tenant delete command, the tenant information is removed from the Ozone Manager

database and default tenant policies are removed from Ranger, but the volume itself along with its data is not

removed. An admin can delete a volume manually using CLI.

Multi Protocol Aware System overview

The overview helps you to understand Ozone file system support, differences between flat namespace and

hierarchical namespace, different bucket layouts, and their use cases.

Ozone natively provides Amazon S3 and Hadoop Filesystem compatible endpoints and is designed to work

seamlessly with enterprise scale Data Warehousing, Batch Analytics, Machine Learning, Streaming Workloads, and

so on. The prominent use cases based on the integration with storage service are mentioned below:

• Ozone as a pure S3 object store semantics

• Ozone as a replacement filesystem for HDFS to solve the scalability issues

• Ozone as a Hadoop Compatible File System (HCFS) with limited S3 compatibility. For example, for key paths

with “/” in it, intermediate directories will be created.

• Multiprotocol access - Interoperability of the same data for various workloads.

Upgrading this feature from older Ozone version to 7.1.8

You must first upgrade Ozone and perform the pre and post finalization steps to use this feature.

Procedure

1.

Pre-Finalization Phase: You can only create buckets with a LEGACY layout. If any client (old or new) tries to

create a new bucket with OBJECT_STORE or FILE_SYSTEM_OPTIMIZED layout, this request is blocked.

2.

Post-Finalization Phase:

a) New Clients: Full Bucket Layout feature is available.

b) Old Clients: You cannot interact with any buckets that are not in LEGACY layout. This means they cannot

talk to FSO or OBS buckets. Ozone displays the UNSUPPORTED_OPERATION exception in all such cases.

For example, attempts to create directories and keys, list status, read bucket info, and so on will also display an

UNSUPPORTED_OPERATION exception

9

Cloudera Runtime Multi Protocol Aware System overview

Files and Objects together

Bucket Layout concept is now introduced in Ozone that helps you with the unified design representing files,

directories, and objects stored in a single system.

A single unified design represents files, directories, and objects stored in a single system. Ozone performs this by

introducing the bucket layout concept in the metadata namespace server. With this, a single Ozone cluster with the

capabilities of both Hadoop Compatible File System (HCFS) and Object Store (like Amazon S3) features by storing

files, directories, objects and buckets efficiently. Also, the same data can be accessed using various protocols.

Bucket Layout

Apache Ozone now supports bucket layout feature. This helps you in categorising different Ozone buckets like FSO,

OBS, and Legacy.

Apache Ozone object store now supports a multi-protocol aware Bucket Layout. The purpose is to categorize Ozone

Bucket based on the prominent use cases:

• FILE_SYSTEM_OPTIMIZED (FSO) Bucket

• Hierarchical FileSystem namespace view with directories and files similar to HDFS.

• Provides high performance namespace metadata operations similar to HDFS.

• Provides capabilities to read/write using Amazon S3.

• OBJECT_STORE (OBS) Bucket - Provides a flat namespace (key-value) similar to Amazon S3.

• LEGACY Bucket - Represents existing pre-created buckets for smooth upgrades from previous Ozone version to

the new Ozone version

10

Cloudera Runtime Multi Protocol Aware System overview

You can create FSO/OBS/LEGACY buckets using following shell commands. You can specify the bucket type in the

layout parameter.

• $ ozone sh bucket create --layout FILE_SYSTEM_OPTIMIZED /s3v/fso-bucket

• $ ozone sh bucket create --layout OBJECT_STORE /s3v/obs-bucket

• $ ozone sh bucket create --layout LEGACY /s3v/bucket

This table explains the differences between Bucket Type and Client Interface

Bucket Type S3 Compatible Interface ofs o3fs (Deprecated, not

recommended)

URL Scheme: http://

bucket.host:9878/

URL Scheme: ofs://om-id/

volume/bucket/key

URL Scheme: o3fs://

bucket.volume.om-id/key

FSO Supports Read, Write, and

Delete operations

Supports Read, Write, and

Delete operations

Supports Read, Write, and

Delete operations

OBS Supports Read, Write, and

Delete operations

Unsupported Unsupported

11

Cloudera Runtime Multi Protocol Aware System overview

Note: FSO and OBS are accessible only on CDP Private Cloud 7.1.8 onwards.

Ozone FS namespace optimization with prefix

Ozone now supports FS namespace optimization with prefix that provides atomicity and consistency in renaming

and deleting files and subdirectories under a directory. Ozone now handles partial failures and performance is now

deterministic.

FSO feature helps in performing the rename or delete metadata operations for the directories which have large

sub-trees or sub-paths. With this feature, Ozone handles partial failures and provides atomicity and consistency in

renaming and deleting each and every file and subdirectory under a directory. Performance is deterministic now and

is similar to HDFS, especially for the delete and rename metadata operations, and if you are running the Spark or

Hive like big data queries.

Highlights of this feature:

• Provide an efficient Hierarchical FileSystem Namespace view with intermediate directories similar to HDFS.

• Support for Atomic Rename and Deletes. This helps Hive, Impala, and Spark for job and task commits.

• Strong consistency guarantees without any partial results in case of directory rename or delete failures.

• Rename, move, and recursive directory delete operations should have deterministic performance numbers

irrespective of the large set of subpaths (directories/files) contained within it.

Changes you can observe by using this feature:

• Apache Hive drop table query, recursive directory deletion, and directory moving operations becomes faster and

consistent without any partial results in case of any failure.

• Dropping a managed Impala table should be efficient without requiring O(n) RPC calls where n is the number of

file system objects for the table.

• Job Committers of Hive, Impala, and Spark often rename their temporary output files to a final output location

at the end of the job. The performance of the job is directly impacted by how quickly the rename operation is

completed.

• ACL support through Apache Ranger.

• For more information on understanding the performance capabilities between Apache Ozone and S3 API and how

to natively integrate workloads, see High Performance Object Store for CDP Private Cloud and High Performance

Object Store for CDP Private Cloud.

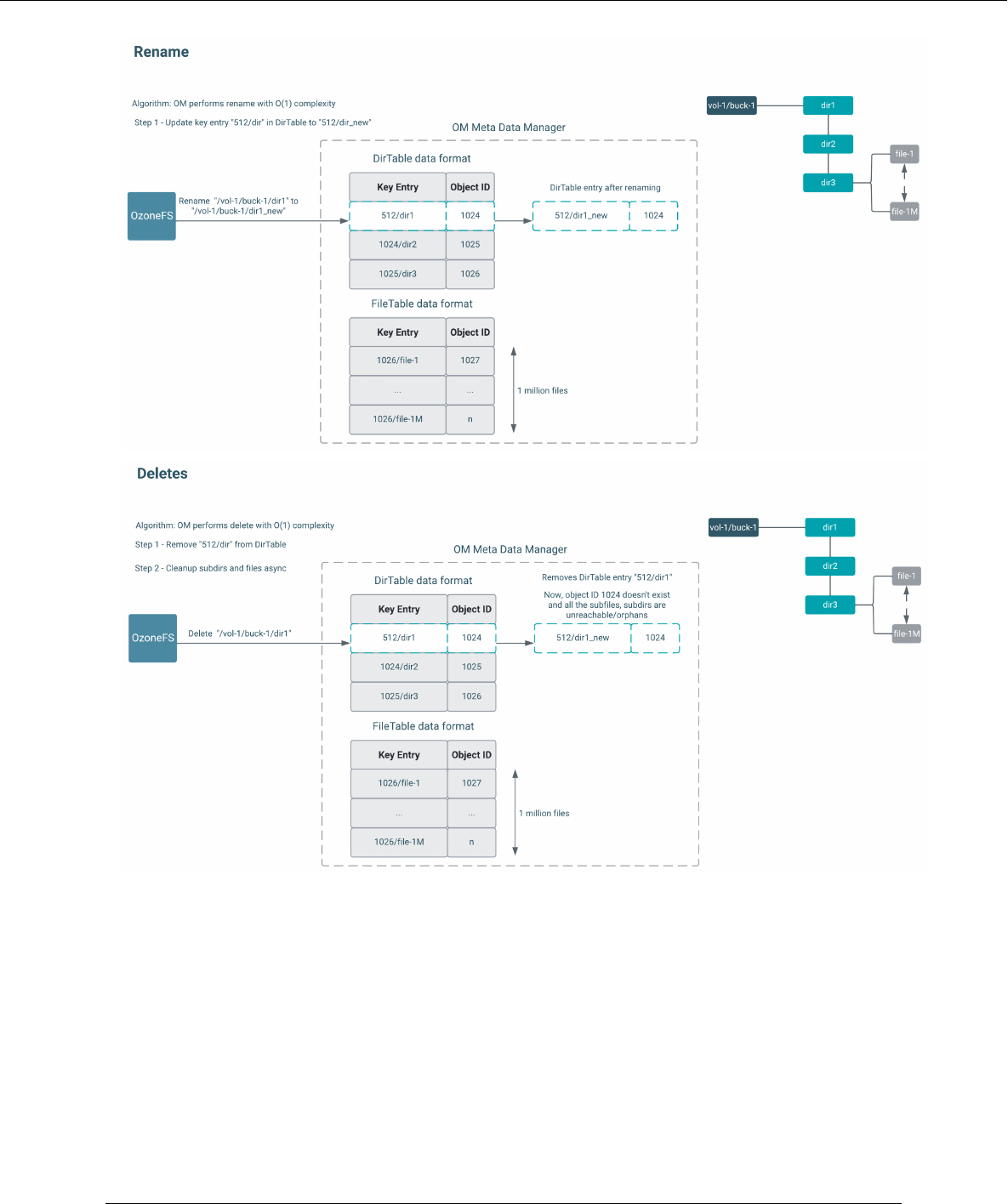

Metadata layout format

In the File System Optimized (FSO) buckets, OM metadata format stores intermediate directories into DirectoryTable

and files into FileTable as shown in the below picture. The key to the table is the name of a directory or a file prefixed

by the unique identifier of its parent directory <parent unique-id>/<filename>

12

Cloudera Runtime Multi Protocol Aware System overview

Delete and Rename Operation

Currently, in the Legacy Ozone file system to delete or rename dir 1, you have to delete or rename dir 1 in all the

rows. This is expensive, time consuming, and not scalable.

Now, there is an object ID allocated for every path created. Deleting or renaming dir 1 now updates all rows based on

the Object ID. The images below explain how rename and delete operations work.

13

Cloudera Runtime Multi Protocol Aware System overview

Interoperability Between S3 and FS APIs

FSO Bucket Layout supports interoperability of data for various use cases. For example, You can create an FSO

Bucket type and ingest data into Apache Ozone using FileSystem API. The same data can be accessed through the

Ozone S3 API (Amazon S3 implementation of the S3 API protocol) and vice versa.

Multi-protocol client access - read/write operations using Ozone S3 and Ozone FS client.

14

Cloudera Runtime Multi Protocol Aware System overview

OBS as Pure Object Store

OBS is the existing Ozone Manager metadata format which stores key entries with full path names, where the

common prefix paths will be duplicated for keys like shown in the below diagram.

Configuration to create bucket with default layout

You must set the following configuration in Cloudera Manager to create a bucket with default layout.

About this task

In Cloudera Manager, you must configure ozone-site.xml to define the default value for bucket layout during

bucket creation if the client has not specified the bucket layout argument. Supported values are OBJECT_STORE,

FILE_SYSTEM_OPTIMIZED, and LEGACY.

By default, this config value is empty. Ozone will default to LEGACY bucket layout if it finds an empty config value.

You must add the below property and provide the value.

15

Cloudera Runtime Multi Protocol Aware System overview

Procedure

1.

Log in to Cloudera Manager UI

2.

Navigate to Clusters

3.

Select the Ozone service

4.

Go to Configurations

5.

Search for Ozone Manager Advanced Configuration Snippet (Safety Valve) for ozone-conf/ozone-site.xml

a) Click Add

b) Click View as XML

c) Copy and paste: <property> <name>ozone.default.bucket.layout</name> <value/> </property>

d) Click View Editor. You must provide the values for the properties.

Property name Value

ozone.default.bucket.layout

Name of the property. By default, Legacy is the

bucket type.

Value Supported values are OBJECT_STORE or

FILE_SYSTEM_OPTIMIZED or LEGACY

Description Enter the Description for the property

e) Click Save Changes

f) Restart the Ozone service

Performing Bucket Layout operations in Apache Ozone using CLI

Run the below commands to get an understanding of the basic operations like create, list, move, read, write, and

delete

Procedure

1.

Creating FSO and OBS buckets using Ozone Shell:

a) ozone sh bucket create --layout FILE_SYSTEM_OPTIMIZED /s3v/fso-bucket

b) ozone sh bucket create --layout OBJECT_STORE /s3v/obs-bucket

2.

Bucket Info:

a) ozone sh bucket info /s3v/fso-bucket

b) ozone sh bucket info /s3v/obs-bucket

FSO operations

You can run the following commands for performing FSO operations.

Procedure

1.

Creating directories inside FSO buckets:

a) ozone fs -mkdir -p o3fs://fso-bucket.s3v.ozone1/dir1/dir2/dir3/

b) ozone fs -mkdir -p o3fs://fso-bucket.s3v.ozone1//aa///bb//cc///

In FSO, bucket paths will be normalized

2.

Listing FSO bucket ozone fs -ls -R o3fs://fso-bucket.s3v.ozone1/

3.

Creating some files inside FSO buckets

a) ozone fs -touch o3fs://fso-bucket.s3v.ozone1/dir1/dir2/dir3/file1

b) ozone fs -touch o3fs://fso-bucket.s3v.ozone1/dir1/dir2/dir3/file2

c) ozone fs -touch o3fs://fso-bucket.s3v.ozone1/aa/bb/cc/abc_file3

16

Cloudera Runtime Multi Protocol Aware System overview

4.

Listing FSO bucket ozone fs -ls -R o3fs://fso-bucket.s3v.ozone1/

5.

Move Command on a file, which internally does a renaming operation ozone fs -mv o3fs://fso-

bucket.s3v.ozone1/dir1/dir2/dir3/file2 o3fs://fso-bucket.s3v.ozone1/dir1/

dir2/

6.

Move Command on a directory, which internally does a renaming operation ozone fs -mv o3fs://fso-

bucket.s3v.ozone1/aa/ o3fs://fso-bucket.s3v.ozone1/new_aa

7.

Listing FSO bucket ozone fs -ls -R o3fs://fso-bucket.s3v.ozone1/

Multi Protocol Access operations using AWS Client

You can run the following commands to run multi protocol access operations using the AWS client.

Procedure

1.

Writing a new file to FSO bucket

a) aws s3 cp --endpoint-url http://0.0.0.0:9878 ozone.txt s3://fso-bucket/

dir1/dir2/dir3/awsfile1

b) aws s3 cp --endpoint-url http://0.0.0.0:9878 ozone.txt s3://fso-bucket/

dir1/dir2/dir3/awsfile2

2.

Reading from Ozone Bucket

a) aws s3api --endpoint http://0.0.0.0:9878 get-object --bucket fso-bucket --

key /dir1/dir2/dir3/awsfile1 ./ozone_doc

b) cat ./ozone_doc

3.

Listing Bucket Objects aws s3api --endpoint-url http://0.0.0.0:9878 list-objects --

bucket fso-bucket

4.

Deleting a file aws s3 rm --endpoint-url http://0.0.0.0:9878 s3://fso-bucket/dir1/

dir2/dir3/awsfile1

5.

Following operations using Ozone FS commands

a) Listing directory ozone fs -ls -R ofs://ozone1/s3v/fso-bucket/

b) Displaying the content of file ozone fs -cat ofs://ozone1/s3v/fso-bucket/dir1/dir2/

dir3/awsfile2

6.

Following operations using Ozone Shell commands

a) Listing Keys ozone sh key list /s3v/fso-bucket/

7.

Following operations using Ozone FS commands

a) Deleting a file ozone fs -rm -skipTrash ofs://ozone1/s3v/fso-bucket/dir1/dir2/

dir3/awsfile2

b) Deleting a directory ozone fs -rm -R -skipTrash ofs://ozone1/s3v/fso-bucket/dir1/

c) Listing directories (dir1 should not exist) ozone fs -ls -R ofs://ozone1/s3v/fso-bucket/

Object Store operations using AWS client

You can run the following commands for performing OBS operations using AWS Client.

Procedure

1.

Creating a bucket aws s3api --endpoint-url http://0.0.0.0:9878 create-bucket --

bucket=obs-s3bucket

2.

Bucket Info ozone sh bucket info /s3v/obs-s3bucket

3.

Writing a file to bucket

a) aws s3 cp --endpoint-url http://0.0.0.0:9878 ozone.txt s3://obs-s3bucket/

dir1/dir2/dir3/##awsfile1

b) aws s3 cp --endpoint-url http://0.0.0.0:9878 ozone.txt s3://obs-s3bucket/

dir1/dir2/dir3/##awsfile2

17

Cloudera Runtime Ozone Ranger Integration

4.

Reading the above file from bucket

a) rm -rf /tmp/sample.txt

b) ozone sh key get /s3v/obs-s3bucket/dir1/dir2/dir3/##awsfile1 /tmp/

sample.txt

c) cat /tmp/sample.txt

5.

Listing bucket object aws s3api --endpoint-url http://0.0.0.0:9878 list-objects --

bucket obs-s3bucket

6.

Deleting a key aws s3 rm --endpoint-url http://0.0.0.0:9878 s3://obs-s3bucket/

dir1/dir2/dir3/awsfile1

Object Store operations using Ozone shell command

You can run the following commands for performing OBS operations using Ozone shell command.

Procedure

1.

Writing a key to bucket ozone sh key put /s3v/obs-s3bucket/bb/cc/dd/file.txt

ozone.txt

2.

Reading key from bucket

a) rm -rf /tmp/sample.txt

b) ozone sh key get /s3v/obs-s3bucket/bb/cc/dd/file.txt /tmp/sample.txt

c) cat /tmp/sample.txt

3.

Deleting a key ozone sh key delete /s3v/obs-s3bucket/bb/cc/dd/file.txt

4.

Listing a key ozone sh key list /s3v/obs-s3bucket/

Ozone Ranger policy

Using the Ozone Ranger policy integration, you can set new Ozone Ranger policies.

Procedure

1.

Ranger permissions for OBJECT_STORE buckets

a) You must configure policy on a resource key path

b) Wild-Card: Keys starting with key path keyRoot/key*

2.

Ranger permissions for FILE_SYSTEM_OPTIMIZED buckets

a) Configure policy on resource path, which can be at the level of a specific file or directory path component.

b) Wild Cards: You can configure a policy for all sub-directories or sub-files using wildcards. For example, /root/

app*. For more information, refer performance optimized authorization approach for rename and recursive

delete operations in the Ranger Ozone plugin.

Note: For more information on setting Ozone Ranger policies, see Ranger Ozone Integration.

Ozone Ranger Integration

Set up policies in Ranger for the users to have the right access permissions to the various Ozone objects such as

buckets and volumes.

When using Ranger to provide a particular user with read/write permissions to a specific bucket, you must configure a

separate policy for the user to have read access to the volume in addition to policies configured for the bucket.

18

Cloudera Runtime Ozone Ranger Integration

Configuring a resource-based policy using Ranger

Using Ranger, you can setup new Ozone policies that will help you to set right access permissions to various Ozone

objects like volumes and buckets.

About this task

Through configuration, Apache Ranger enables both Ranger policies and Ozone permissions to be checked for a

user request. When the Ozone Manager receives a user request, the Ranger plugin checks for policies set through the

Ranger Service Manager. If there are no policies, the Ranger plugin checks for permissions set in Ozone.

Cloudera recommends you to create permissions at the Ranger Service Manager, and to have restrictive permissions

at the Ozone level.

Procedure

1.

On the Service Manager page, select an existing Ozone service. The List of Policies page appears.

2.

Click Add New Policy. The Create Policy page appears.

3.

Complete the Create Policy page as follows:

Field Description

Policy Name

Enter a unique name for this policy. The name cannot

be duplicated anywhere in the system.

Normal or Override

Enables you to specify an override policy. When

override is selected, the access permissions in the

policy override the access permissions in existing

policies. This feature can be used with Add Validity

Period to create temporary access policies that override

existing policies.

Add Validity Period

Specify a start and end time for the policy.

Policy Label

(Optional) Specify a label for this policy. You can

search reports and filter policies based on these labels.

Ozone Volume

Specify volumes that can be accessed. Ensure that the

Ozone volume key is set to Include.

19

Cloudera Runtime Ozone Ranger Integration

Field Description

If you want to deny access at the volume level, then

disable this option by turning off using the Ozone

Volume key to Exclude.

Bucket

Specify buckets that can be accessed. Ensure that the

Ozone Bucket key is set to Include.

If you want to deny access at the bucket level, then

disable this option by turning off using the bucket key.

Or, select None from the Bucket drop-down.

Key

Provide the Key

Recursive or Non Recursive

Recursive or Non recursive function

Description

(Optional) Describe the purpose of the policy.

Audit Logging

Specify whether this policy is audited. To disable

auditing, turn off the Audit Logging key.

4.

Allow Conditions

Label Description

Select Role

Specify the roles to which this policy applies.

To designate a role as an Administrator, select the

Delegate Admin check box. Administrators can edit

or delete the policy, and can also create child policies

based on the original policy.

Select Group

Specify the groups to which this policy applies.

To designate a group as an Administrator, select the

Delegate Admin check box. Administrators can edit

or delete the policy, and can also create child policies

based on the original policy.

The public group contains all users, so granting access

to the public group grants access to all users.

Select User

Specify the users to which this policy applies.

To designate a user as an Administrator, select the

Delegate Admin check box. Administrators can edit

or delete the policy, and can also create child policies

based on the original policy.

Policy Conditions

Provide the IP address range

Permissions

Add or edit permissions: All, Read, Write,, Create,

List, Delete, Read_ACL, Write_ACL, Select/Deselect

All.

Delegate Admin

You can use Delegate Admin to assign administrator

privileges to the roles, groups, or users specified in the

policy. Administrators can edit or delete the policy,

20

Cloudera Runtime Erasure Coding overview

Label Description

and can also create child policies based on the original

policy.

Note: You can use the Plus (+) symbol to add additional conditions. Conditions are evaluated in the order

listed in the policy. The condition at the top of the list is applied first, then the second, then the third,

and so on. Similarly, you can also exclude certain Allow Conditions by adding them to the Exclude from

Allow Conditions list.

5.

You can use the Deny All Other Accesses toggle key to deny access to all other users, groups, and roles other than

those specified in the allow conditions for the policy.

6.

If you wish to deny access to a few or specific users, groups, or roles, then use must set Deny Conditions.

You can use the Plus (+) symbol to add deny conditions. Conditions are evaluated in the order listed in the policy.

The condition at the top of the list is applied first, then the second, then the third, and so on. Similarly, you can

also exclude certain Deny Conditions by adding them to the Exclude from Deny Conditions list.

7.

Click Add.

Erasure Coding overview

The Ozone Erasure Coding feature provides data durability and fault-tolerance along with reduced storage space and

ensures data durability similar to Ratis THREE replication approach.

The Ozone default replication scheme Ratis THREE has 200% overhead storage space including other resources.

Using EC in place of replication helps in reducing storage cost as the overhead storage space is only 50%. For

example, if you replicate 6 blocks of data, you need 18 blocks of disk space in Ratis. However, if you use EC with

Ozone, you need 6 blocks plus 3 parity totalling to 9 blocks of disk space.

Write and read using EC

When a client requests write, OM allocates a block group (data and parity) number of nodes from the pipeline to the

client. Client writes d number of chunks to d number of nodes. Parity chunks(p) are created and transferred to the

remaining p number of nodes. After this process is completed, the client can request for a new block group after the

writing of the current block group is finished.

For reads, OM provides the node location information. If the key is erasure coded, the client reads the data in the EC

way.

Note:

• Cloudera recommends you to use the Erasure Coding feature at the bucket level so that EC is applied on

all the keys created in a bucket. However, you can configure EC at key level as well.

• If you do not want EC to be configured for specific keys, you can explicitly specify the replication

configuration for those keys.

• If replication configuration is not defined specifically for both buckets and keys, then cluster-wide or

global default configuration is applied.

Enabling EC replication configuration cluster-wide

You can set cluster-wide default Replication configuration with EC by using the configuration keys

ozone.server.default.replication.type and ozone.server.default.replication.

Procedure

1.

Log in to Cloudera Manager UI

2.

Navigate to Clusters

21

Cloudera Runtime Erasure Coding overview

3.

Select the Ozone service

4.

Go to Configurations

5.

Search for ozone.server.default.replication and ozone.server.default.replication.type

a) Click Add

b) Click View as XML

c) For ozone.server.default.replication property, copy and paste: <property> <name>ozone.server.default.re

plication</name> <value>RS-X-Y-1024k</value> </property>

Note: RS-X-Y-1024k is an example where RS is the codec type, X is the number of data blocks, Y is

the parity and 1024k is the size of the EC chunk size. For example, if you have 6 data blocks of 1024k

size and you need 3 parity blocks, this is the value RS-6-3-1024k

d) For ozone.server.default.replication.type property, copy and paste: <property> <name>ozone.server.de

fault.replication.type</name> <value>EC</value> </property>

e) Click View Editor. You must provide the values for the properties

Property Value

ozone.server.default.replication

Supported EC options are RS-3-2-1024K,

RS-6-3-1024K, and RS-10-4-1024K

ozone.server.default.replication.type EC

f) Click Save Changes

g) Restart the Ozone service

Enabling EC replication configuration on bucket

You can enable EC replication configuration at bucket level.

Procedure

1.

You can set the bucket level EC Replication configuration through CLI by executing the command ozone sh

bucket create <bucket path> --type EC --replication rs-6-3-1024k

2.

To reset the EC Replication configuration, execute the following command ozone sh bucket set-

replication-config <bucket path> --type EC --replication rs-3-2-1024k

Note:

• The new configuration applies only to the keys created after resetting the EC Replication

configuration. Keys created before resetting the EC Replication configuration will have the older

configuration.

• If you set EC Replication configuration on RATIS (while writing at a bucket level) and you are using

Ozone File System (ofs/o3fs), there is a timeout of 10 minutes where you will continue to write on

RATIS as a caching process in place at the bucket level. For fresh buckets, if you set EC Replication

configuration on RATIS, the new configuration is immediately available for the bucket. However, for

ofs/o3fs/s3 you can only use bucket level settings as you cannot allow EC setting on key creation.

Enabling EC replication configuration on keys or files

You can enable EC configuration replication at key level.

Procedure

You can set the key level EC Replication configuration command through CLI while creating the keys irrespective of

bucket Replication configuration ozone sh key put <Ozone Key Object Path> <Local File> --

type EC --replication rs-6-3-1024k

22

Cloudera Runtime Container Balancer overview

Note: If you have already configured the default EC Replication configuration for a bucket, you do not have

to configure the EC Replication configuration while creating a key.

Container Balancer overview

Container balancer is a service in Storage Container Manager that balances the utilization of datanodes in an Ozone

cluster.

A cluster is considered balanced if for each datanode, the utilization of the datanode (used space to capacity ratio)

differs from the utilization of the cluster (used space to capacity ratio of the entire cluster) no more than the threshold.

This service balances the cluster by moving containers among over-utilized and under-utilized datanodes.

Note:

1.

Container balancer has a command line interface for administrators. You can run the ozone admin

containerbalancer -h help command for commands related to Container Balancer.

2.

Container balancer supports only closed Ratis containers. Erasure Coded containers are not supported yet.

Container balancer CLI commands

You can run the following commands in the cluster.

• To start the service, run the following command ozone admin containerbalancer start

• To stop the service, run the following command ozone admin containerbalancer stop

• To check the status of the service, run the following command ozone admin containerbalancer

status

Configuring container balancer service

To use container balancer using Cloudera Manager, perform the following steps.

Procedure

1.

Log in to Cloudera Manager UI

2.

Navigate to Clusters

3.

Select the Ozone service

4.

Go to Configurations

23

Cloudera Runtime Container Balancer overview

5.

You can filter configurations for Container Balancer by selecting the Scope as Storage Container Manager or

search for hdds.container.balancer

6.

You can now set the values for the Container Balancer configurations.

Activating container balancer using Cloudera Manager

You can activate and deactivate container balancer feature using Cloudera Manager. Perform the following steps to

activate or deactivate the feature.

24

Cloudera Runtime Managing storage elements by using the command-line interface

Activating container balancer through Cloudera Manager

1.

Log in to Cloudera Manager UI

2.

Navigate to Clusters

3.

Select the Ozone service

4.

Click Actions

5.

Click Activate Container Balancer

Deactivating container balancer through Cloudera Manager

1.

Log in to Cloudera Manager UI

2.

Navigate to Clusters

3.

Select the Ozone service

4.

Click Actions

5.

Click Deactivate Container Balancer

Managing storage elements by using the command-line

interface

The Ozone shell is the primary command line interface for managing storage elements such as volumes, buckets, and

keys.

Note: Ensure that the valid length for bucket or volume name is 3-63 characters.

For more information about the various Ozone command-line tools and the Ozone shell, see https://

hadoop.apache.org/ozone/docs/1.0.0/interface/cli.html.

Commands for managing volumes

Depending on whether you are an administrator or an individual user, the Ozone shell commands enable you to

create, delete, view, list, and update volumes. Before running these commands, you must have configured the Ozone

Service ID for your cluster from the Configuration tab of the Ozone service on Cloudera Manager.

Creating a volume

Only an administrator can create a volume and assign it to a user. You must assign administrator privileges to users

before they can create volumes. For more information, see Assigning administrator privileges to users.

Command Syntax

ozone sh volume create --quota=<volu

mecapacity> --user=<username> URI

Purpose Creates a volume and assigns it to a user.

25

Cloudera Runtime Managing storage elements by using the command-line interface

Arguments

• -q, quota: Specifies the maximum size the volume can occupy in

the cluster. This is an optional parameter.

• -u, user: The name of the user who can use the volume. The

designated user can create buckets and keys inside the particular

volume. This is a mandatory parameter.

• URI: The name of the volume to create in the <prefix>://<Service

ID>/<volumename> format.

Example

ozone sh volume create --quota=2TB -

-user=usr1 o3://ozone1/vol1

This command creates a 2-TB volume named vol1 for user usr1. Here,

ozone1 is the Ozone Service ID.

Deleting a volume

Command Syntax

ozone sh volume delete URI

Purpose Deletes the specified volume, which must be empty.

Arguments URI: The name of the volume to delete in the <prefix>://<Service ID>/

<volumename> format.

Example

ozone sh volume delete o3://ozone1/v

ol2

This command deletes the empty volume vol2. Here, ozone1 is the

Ozone Service ID.

Viewing volume information

Command Syntax

ozone sh volume info URI

Purpose Provides information about the specified volume.

Arguments URI: The name of the volume whose details you want to view, in the

<prefix>://<Service ID>/<volumename> format.

Example

ozone sh volume info o3://ozone1/

vol3

This command provides information about the volume vol3. Here,

ozone1 is the Ozone Service ID.

Listing volumes

Command Syntax

ozone sh volume list --user <usernam

e> URI

Purpose Lists all the volumes owned by the specified user.

26

Cloudera Runtime Managing storage elements by using the command-line interface

Arguments

• -u, user: The name of the user whose volumes you want to list.

• URI: The Service ID of the cluster in the <prefix>://<Service ID>/

format.

Example

ozone sh volume list --user usr2 o3:

//ozone1/

This command lists the volumes owned by user usr2. Here, ozone1 is

the Ozone Service ID.

Updating a volume

Command Syntax

ozone sh volume setquota --namespace

-quota <namespacecapacity> --space-q

uota <volumecapacity> URI

Purpose Updates the quota of the specific volume.

Arguments

• --namespace-quota <namespacecapacity>: Specifies the maximum

number of buckets this volume can have.

• --space-quota <volumecapacity>: Specifies the maximum size the

volume can occupy in the cluster.

• URI: The name of the volume to update in the <prefix>://<Service

ID>/<volumename> format.

Example

ozone sh volume setquota --namespace

-quota 1000 --space-quota 10GB /volu

me1

This command sets volume1 namespace quota to 1000 and space quota

to 10GB.

Assigning administrator privileges to users

You must assign administrator privileges to users before they can create Ozone volumes. You can use Cloudera

Manager to assign the administrative privileges.

About this task

Procedure

1.

On Cloudera Manager, go to the Ozone service.

2.

Click the Configuration tab.

3.

Search for the Ozone Service Advanced Configuration Snippet (Safety Valve) for ozone-conf/ozone-site.xml

property.

Specify values for the selected properties as follows:

• Name: Enter ozone.administrators.

• Value: Enter the ID of the user that you want as an administrator. In case of multiple users, specify a comma-

separated list of users.

• Description: Specify a description for the property. This is an optional value.

4.

Enter a Reason for Change, and then click Save Changes to commit the change.

27

Cloudera Runtime Managing storage elements by using the command-line interface

Commands for managing buckets

The Ozone shell commands enable you to create, delete, view, and list buckets. Before running these commands,

you must have configured the Ozone Service ID for your cluster from the Configuration tab of the Ozone service on

Cloudera Manager.

Note: Ensure that the valid length for bucket or volume name is 3-63 characters.

Creating a bucket

Command Syntax

ozone sh bucket create URI

Purpose Creates a bucket in the specified volume.

Arguments URI: The name of the bucket to create in the <prefix>://<Service ID>/

<volumename>/<bucketname> format.

Example

ozone sh bucket create o3://ozone1/v

ol1/buck1

This command creates a bucket buck1 in the volume vol1. Here,

ozone1 is the Ozone Service ID.

Deleting a bucket

Command Syntax

ozone sh bucket delete URI

Purpose Deletes the specified bucket, which must be empty.

Arguments URI: The name of the bucket to delete in the <prefix>://<Service ID>/

<volumename>/<bucketname> format.

Example

ozone sh bucket create o3://ozone1/v

ol1/buck2

This command deletes the empty bucket buck2. Here, ozone1 is the

Ozone Service ID.

Viewing bucket information

Command Syntax

ozone sh bucket info URI

Purpose Provides information about the specified bucket.

Arguments URI: The name of the bucket whose details you want to view, in the

<prefix>://<Service ID>/<volumename>/<bucketname> format.

Example

ozone sh bucket info o3://ozone1/vol

1/buck3

This command provides information about bucket buck3. Here, ozone1

is the Ozone Service ID.

28

Cloudera Runtime Managing storage elements by using the command-line interface

Listing buckets

Command Syntax

ozone sh bucket list URI --length=<n

umber_of_buckets> --prefix=<bucket_p

refix> --start=<starting_bucket>

Purpose Lists all the buckets in a specified volume.

Arguments

• -l, length: Specifies the maximum number of results to return. The

default is 100.

• -p, prefix: Lists bucket names that match the specified prefix.

• -s, start: Returns results starting with the bucket after the specified

value.

• URI: The name of the volume whose buckets you want to list, in

the <prefix>://<Service ID>/<volumename>/ format.

Example

ozone sh bucket list o3://ozone1/vol

2 --length=100 --prefix=buck --start

=buck

This command lists 100 buckets belonging to volume vol2 and names

starting with the prefix buck. Here, ozone1 is the Ozone Service ID.

Commands for managing keys

The Ozone shell commands enable you to upload, download, view, delete, and list keys. Before running these

commands, you must have configured the Ozone Service ID for your cluster from the Configuration tab of the Ozone

service on Cloudera Manager.

Downloading a key from a bucket

Command Syntax

ozone sh key get URI <local_file

name>

Purpose Downloads the specified key from a bucket in the Ozone cluster to the

local file system.

Arguments

• URI: The name of the key to download in the <prefix>://<Service

ID>/<volumename>/<bucketname>/<keyname> format.

• filename: The name of the file to which you want to write the key.

Example

ozone sh key get o3://ozone1/hive/ju

n/sales.orc sales_jun.orc

This command downloads the sales.orc file from the /hive/jun bucket

and writes to the sales_jun.orc file present in the local file system.

Here, ozone1 is the Ozone Service ID.

Uploading a key to a bucket

Command Syntax

ozone sh key put URI <filename>

Purpose Uploads a file from the local file system to the specified bucket in the

Ozone cluster.

29

Cloudera Runtime Managing storage elements by using the command-line interface

Arguments

• URI: The name of the key to upload in the <prefix>://<Service

ID>/<volumename>/<bucketname>/<keyname> format.

• filename: The name of the local file that you want to upload.

• -r, --replication: The number of copies of the file that you want to

upload.

Example

ozone sh key put o3://ozone1/hive/ye

ar/sales.orc sales_corrected.orc

This command adds the sales_corrected.orc file from the local file

system as key to /hive/year/sales.orc on the Ozone cluster. Here,

ozone1 is the Ozone Service ID.

Deleting a key

Command Syntax

ozone sh key delete URI

Purpose Deletes the specified key from the Ozone cluster.

Arguments URI: The name of the key to delete in the <prefix>://<Service ID>/

<volumename>/<bucketname>/<keyname> format.

Example

ozone sh key delete o3://ozone1/hive

/jun/sales_duplicate.orc

This command deletes the sales_duplicate.orc key. Here, ozone1 is the

Ozone Service ID.

Viewing key information

Command Syntax

ozone sh key info URI

Purpose Provides information about the specified key.

Arguments URI: The name of the key whose details you want to view, in the

<prefix>://<Service ID>/<volumename>/<bucketname>/<keyname>

format.

Example

ozone sh key info o3://ozone1/hive/j

un/sales_jun.orc

This command provides information about the sales_jun.orc key. Here,

ozone1 is the Ozone Service ID.

Listing keys

Command Syntax

ozone sh key list URI --length=<numb

er_of_keys> --prefix=<key_prefix> --

start=<starting_key>

Purpose Lists the keys in a specified bucket.

30

Cloudera Runtime Using Ozone S3 Gateway to work with storage elements

Arguments

• -l, length: Specifies the maximum number of results to return. The

default is 100.

• -p, prefix: Returns keys that match the specified prefix.

• -s, start: Returns results starting with the key after the specified

value.

• URI: The name of the bucket whose keys you want to list, in the

<prefix>://<Service ID>/<volumename>/<bucketname>/ format.

Example

ozone sh key list o3://ozone1/hive/y

ear/ --length=100 --prefix=<key_pref

ix> --start=day1

This command lists 100 keys belonging to the volume /hive/year/ and

names starting with the prefix day, but listed after the value day1. Here,

ozone1 is the Ozone Service ID.

Using Ozone S3 Gateway to work with storage elements

Ozone provides S3 Gateway, a REST interface that is compatible with the Amazon S3 API. You can use S3 Gateway

to work with the Ozone storage elements.

In addition, you can use the Amazon Web Services CLI to use S3 Gateway.

After starting Ozone S3 Gateway, you can access it from the following link:

http://localhost:9878

Note: For the users or client applications that use S3 Gateway to access Ozone buckets on a secure cluster,

Ozone provides the AWS access key ID and AWS secret key. See the Ozone security documentation for more

information.

Configuration to expose buckets under non-default volumes

Ozone S3 Gateway allows access to all the buckets under the default /s3v volume. To access the buckets under a non-

default volume, you must create a symbolic link to that bucket.

Consider a non-default volume /vol1 that has a bucket /bucket1 in the following example:

ozone sh volume create /s3v

ozone sh volume create /vol1

ozone sh bucket create /vol1/bucket1

ozone sh bucket link /vol1/bucket1 /s3v/common-bucket

As shown in the example, you can expose /bucket1 as an s3-compatible /common-bucket bucket through the Ozone

S3 Gateway.

REST endpoints supported on Ozone S3 Gateway

In addition to the GET service operation, Ozone S3 Gateway supports various bucket and object operations that the

Amazon S3 API provides.

The following table lists the supported Amazon S3 operations:

Operations on S3 Gateway

• GET service

31

Cloudera Runtime Using Ozone S3 Gateway to work with storage elements

Bucket operations

• GET Bucket (List Objects) Version 2

• HEAD Bucket

• DELETE Bucket

• PUT Bucket

• Delete multiple objects (POST)

Object operations

• PUT Object

• COPY Object

• GET Object

• DELETE Object

• HEAD Object

• Multipart Upload

Configuring Ozone to work as a pure object store

Depending on your requirement, you can configure Ozone to use the Amazon S3 APIs and perform the various

volume and bucket operations.

About this task

You must modify the ozone.om.enable.filesystem.paths property in ozone-site.xml by using Cloudera Manager to

configure Ozone as an object store.

Procedure

1.

Open the Cloudera Manager Admin Console.

2.

Go to the Ozone service.

3.

Click the Configuration tab.

4.

Select Category > Advanced.

5.

Configure the Ozone Service Advanced Configuration Snippet (Safety Valve) for ozone-conf/ozone-site.xml

property as specified.

• Name: ozone.om.enable.filesystem.paths

• Value: False

6.

Enter a Reason for Change and then click Save Changes.

7.

Restart the Ozone service.

What to do next

You must also configure the client applications accessing Ozone to reflect the Ozone configuration changes. If you

have applications in Spark, Hive or other services interacting with Ozone through the S3A interface, then you must

make specific configuration changes in the applications.

Access Ozone S3 Gateway using the S3A filesystem

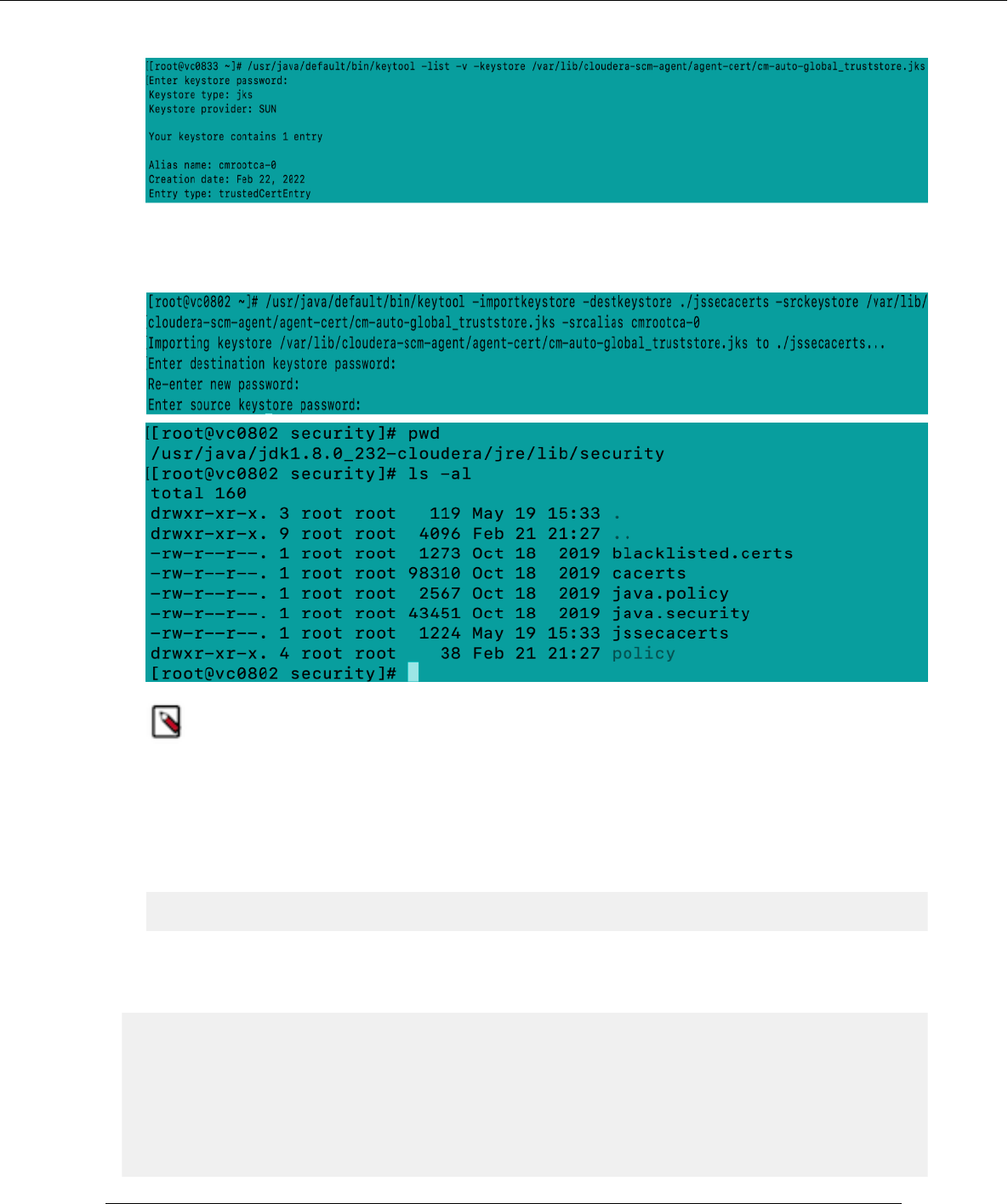

If you want to run Ozone S3 Gateway from the S3A filesystem, you must import the required CA certificate into the

default Java truststore location on all the client nodes for running shell commands or jobs. This is a prerequisite when

the S3 Gateway is configured with TLS.

32

Cloudera Runtime Using Ozone S3 Gateway to work with storage elements

About this task

S3A relies on the hadoop-aws connector, which uses the built-in Java truststore ($JAVA_HOME/jre/lib/security/cace

rts). To override this truststore, you must create another truststore named jssecacerts in the same folder as cacerts on

all the cluster nodes. When using Ozone S3 Gateway, you can import the CA certificate used to set up TLS into cace

rts or jssecacerts on all the client nodes for running shell commands or jobs. Importing the certificate is important

because the CA certificate used to set up TLS is not available in the default Java truststore, while the hadoop-aws

connector library trusts only those certificates that are present in the built-in Java truststore.

Note:

• Ozone S3 Gateway currently does not support ETags and versioning. Therefore, you must disable any

configuration related to them when using S3A with Ozone S3 Gateway.

• S3A is not supported when the File System Optimization (FSO) ozone.om.enable.filesystem.paths is

enabled for Ozone Managers. Note that FSO is enabled by default. Therefore, to use S3A, you must

override or set the ozone.om.enable.filesystem.paths property to false in the Cloudera Manager Clusters

Ozone service Configuration Ozone Service Advanced Configuration Snippet (Safety Valve) for ozone-

conf/ozone-site.xml property. After you save the configuration, restart all Ozone Managers for the

configuration to take affect.

Important: It is recommended that you use ofs:// to denote the Ozone storage path instead of s3a:// wherever

applicable. For example, use ofs://ozone1/vol1/bucket1/dir1/key1 instead of s3a://bucket1/dir1/key1.

Procedure

• Create a truststore named jssecacerts at $JAVA_HOME/jre/lib/security/ on all the cluster nodes configured for S3

Gateway, as specified.

a) Run keytool to view the associated CA certificate and determine the srcalias from the output of the command.

/usr/java/default/bin/keytool -list -v -keys

tore [***ssl.client.truststore.location***]

b) Import the CA certificate to all the hosts configured for S3 Gateway.

/usr/java/default/bin/keytool -importkeystore -destk

eystore $JAVA_HOME/jre/lib/security/jssecacerts -srckeys

tore [***ssl.client.truststore.location***] -srcalias [***alias***]

Accessing Ozone S3 using S3A FileSystem

If the Ozone S3 gateway is configured with TLS (HTTPs), you must import the CA certificate to Java truststore. This

is because the CA certificate that is used to set up TLS is not available in the default Java truststore; however, the

hadoop-aws connector library only trusts the built-in Java truststore certificates.

To override the default Java truststore, create a truststore named jssecacerts in the same directory ($JAVA_HOME/

jre/lib/security/jssecacerts) on all cluster nodes where the user intends to run jobs or shell commands against

Ozone S3. You can find the Ozone S3 gateway truststore location from the ozone-site.xml file which is

normally located in the /etc/ozone/conf.cloudera.OZONE-1 directory. From the ozone-site.xml file, you can find

ssl.client.truststore.location and ssl.client.truststore.password.

List entries in the store

33

Cloudera Runtime Using Ozone S3 Gateway to work with storage elements

• /usr/java/default/bin/keytool -list -v -keystore <<ssl.client.truststore.location>>

From the command output, you can find out the srcalias value which is shown as “Alias name”. In this example,

the “Alias name” is cmrootca-0. Import the CA certificate (In this example, the certificate is imported to

jssecacerts truststore). /usr/java/default/bin/keytool -importkeystore -destkeystore $JAVA_HOME/jre/lib/security/

jssecacerts -srckeystore <<ssl.client.truststore.location>> -srcalias <<alias>>

Note: Depending on the installed JAVA version on your cluster, the jssecacerts truststore directory path

might be different from what the command line and screenshot show.

• Enter the destination password as “changeit” and the source password as it is configured in the cluster.

Ozone S3 currently does not support Etags and versioning because the configuration related to them needs to be

disabled when using S3A filesystem with Ozone S3. You can either pass the Ozone S3 configurations from the

command line or store them in the cluster-wide safety valve in the core-site.xml file.

• Obtain awsAccessKey and awsSecret using the ozone s3 getsecret command

ozone s3 getsecret --om-service-id=<<ozone service id>>

• Ozone S3 properties need to be either passed in from command line or stored as cluster-wide Safety Valve in

core-site.xml file. To do this is, add the Safety Valve to core-site.xml through HDFS configuration from Cloudera

Manager.

fs.s3a.impl org.apache.hadoop.fs.s3a.S3AFileSystem

fs.s3a.access.key <<accessKey>>

fs.s3a.secret.key <<secret>>

fs.s3a.endpoint <<Ozone S3 endpoint Url>>

fs.s3a.bucket.probe 0

fs.s3a.change.detection.version.required false

fs.s3a.change.detection.mode none

fs.s3a.path.style.access true

34

Cloudera Runtime Using Ozone S3 Gateway to work with storage elements

In the configurations, replace <<accessKey>> and <<secret>> with awsAccessKey and awsSecret obtained using the

Ozone S3 getsecret command accordingly and <<Ozone S3 endpoint URL>> with Ozone S3 gateway URL from the

cluster.

If you do not store the Ozone S3 properties as cluster-wide Safety Valve in core-site.xml file, you can pass the

following in from command line:

Create a directory “dir1/dir2” in testbucket:

hadoop fs -Dfs.s3a.bucket.probe=0 -Dfs.s3a.change.detection.version.require

d=false -Dfs.s3a.change.detection.mode=none -Dfs.s3a.access.key=<<accesskey>

> -Dfs.s3a.secret.key=<<secret>> -Dfs.s3a.endpoint=<<s3 endpoint url>> -Dfs.

s3a.path.style.access=true -Dfs.s3a.impl=org.apache.hadoop.fs.s3a.S3AFileSys

tem -mkdir -p s3a://testbucket/dir1/dir2

S3 properties are stored as safety valves in the HDFS core-site.xml file in the following sample shell commands:

• Create a directory “dir1/dir2” in testbucket.

hadoop fs -mkdir -p s3a://testbucket/dir1/dir2

• Place a file named key1 in the “dir1/dir2” directory in testbucket

hadoop fs -put /tmp/key1 s3a://testbucket/dir1/dir2/key1

35

Cloudera Runtime Using Ozone S3 Gateway to work with storage elements

• List files/directories under testbucket

Examples of using the S3A filesystem with Ozone S3 Gateway

You can use the S3A filesystem with Ozone S3 Gateway to perform different Ozone operations.

The following examples show how you can use the S3A filesystem with Ozone.

Note: In the following examples, replace the values of access key and secret from the output of the ozone s3

getsecret --om-service-id=<ozone service id> command and replace the Ozone S3 endpoint URL with the

S3 Gateway URL of the Ozone cluster.

Creating a directory in a bucket

The following example shows how you can create a directory /dir1/dir2 within a bucket named testbucket:

hadoop fs -Dfs.s3a.bucket.probe=0 -Dfs.s3a.change.detection.version.require

d=false -Dfs.s3a.change.detection.mode=none -Dfs.s3a.access.key=<accesskey>

-Dfs.s3a.secret.key=<secret> -Dfs.s3a.endpoint=<s3 endpoint url> -Dfs.s3

a.path.style.access=true -Dfs.s3a.impl=org.apache.hadoop.fs.s3a.S3AFileSyste

m -mkdir -p s3a://testbucket/dir1/dir2

Adding a key to a directory

The following example shows how you can add a key to the dir2 directory created in the previous example:

hadoop fs -Dfs.s3a.bucket.probe=0 -Dfs.s3a.change.detection.version.require

d=false -Dfs.s3a.change.detection.mode=none -Dfs.s3a.access.key=<accesskey>

-Dfs.s3a.secret.key=<secret> -Dfs.s3a.endpoint=<s3 endpoint url> -Dfs.s3

a.path.style.access=true -Dfs.s3a.impl=org.apache.hadoop.fs.s3a.S3AFileSyste

m -put /tmp/key1 s3a://testbucket/dir1/dir2/key1

Listing files or directories in a bucket