Artificial Intelligence

and Algorithms in Risk

Assessment

Addressing Bias,

Discrimination and other Legal

and Ethical Issues

A Handbook

HANDBOOK ON ETHICAL ISSUES RELATED TO ALGORITHMS, AUTOMATION AND AI

2

© European Labour Authority, 2023

Reproduction is authorised provided the source is acknowledged.

For any use or reproduction of photos or other material that is not under the copyright of the European Labour

Authority, permission must be sought directly from the copyright holders.

Neither the European Labour Authority nor any person acting on behalf of the European Labour Authority is

responsible for the use which might be made of the following information.

The present document has been produced by Véronique Bruggeman, Marco Paron Trivellato (Milieu Consulting

SRL), Prof. Raphaële Xenidis and Prof. Benjamin van Giffen as authors. This task has been carried out exclusively

by the authors in the context of a contract between the European Labour Authority and Milieu Consulting SRL,

awarded following a tender procedure. The document has been prepared for the European Labour Authority,

however, it reflects the views of the authors only. The information contained in this report does not reflect the views

or the official position of the European Labour Authority.

The handbook is based on a training session, facilitated by Milieu Consulting, that took place on 26 May 2023 and

was headed by Prof. Raphaële Xenidis and Prof. Benjamin van Giffen.

3

HANDBOOK ON ETHICAL ISSUES RELATED TO ALGORITHMS, AUTOMATION AND AI

Contents

List of tables ........................................................................................................................... 4

List of figures .......................................................................................................................... 4

Abbreviations ......................................................................................................................... 5

Introduction ............................................................................................................................ 6

1.0 Algorithms, automation and AI ..................................................................................... 7

1.1 Defining key concepts ............................................................................................................................ 7

1.2 How do they work? ................................................................................................................................ 8

1.3 The CRISP-DM model ........................................................................................................................... 9

1.4 How can AI and algorithms discriminate? ........................................................................................... 11

2.0 Machine learning bias ................................................................................................. 14

2.1 Overview of machine learning biases .................................................................................................. 14

2.2 Other examples of AI bias ................................................................................................................... 17

3.0 The legal framework .................................................................................................... 19

3.1 Anti-discrimination law: The fundamental right to non-discrimination in Europe ................................. 19

3.2 Applying anti-discrimination law ........................................................................................................... 21

3.2.1 When is bias unlawful discrimination? ....................................................................................... 21

3.2.2 Fairness and bias versus equality and discrimination ............................................................... 25

3.2.3 Gaps in and limitations of legal scope ....................................................................................... 25

3.3 AI Sectoral Regulation. Taking stock of European developments ...................................................... 28

3.3.1 EU AI Act (proposed) ................................................................................................................. 28

3.3.2 AI Liability Directive (proposed) ................................................................................................. 30

3.3.3 Council of Europe Framework Convention on AI ...................................................................... 30

3.4 Interaction with data protection law: taking stock of European developments .................................... 31

3.4.1 EU General Data Protection Regulation.................................................................................... 31

3.4.2 Council of Europe Convention 108+ ......................................................................................... 32

4.0 Mitigation framework ................................................................................................... 34

/ 4

5.0 Key ethical requirements. Beyond the law, what ethical requirements can

support non-discriminatory AI? ................................................................................. 41

5.1 Ethics Guidelines for Trustworthy AI.................................................................................................... 42

5.2 The five converging ethics principles ................................................................................................... 44

5.3 Applying ethics principles .................................................................................................................... 47

List of References ................................................................................................................ 49

List of tables

Table 1: Overview of ML biases, with examples of Real-world scenario 1 (literally taken from van Giffen

et al. 2022) .......................................................................................................................................15

Table 2: EU primary anti-discrimination law ...........................................................................................................19

Table 3: EU secondary anti-discrimination law .......................................................................................................20

Table 4: Direct versus indirect discrimination in the framework of AI/ML ...............................................................23

Table 5: Overview of 24 mitigation methods for addressing biases within the CRISP-DM process phase ...........35

Table 6: Why and how to use the seven key mitigation methods (extended from van Giffen et al. 2022) ............36

Table 7: Explanation of the five ethics principles ...................................................................................................45

List of figures

Figure 1: Artificial Intelligence vs. Machine Learning vs. Deep Learning vs. Data Science ..................................... 7

Figure 2: Conceptual approach: using machine learning for predictions in real-world applications ....................... 8

Figure 3: Process phases of the CRISP-DM model ...............................................................................................10

Figure 4: When is algorithmic bias unlawful discrimination? ..................................................................................21

Figure 5: The risk-based approach in the proposed EU AI Act ..............................................................................28

Figure 6: AI Fairness checklist................................................................................................................................40

Figure 7: Geographical distribution of ethical AI guidelines ...................................................................................42

Figure 8: The three components of trustworthy AI. ................................................................................................43

Figure 9: The five converging ethics principles ......................................................................................................44

5

HANDBOOK ON ETHICAL ISSUES RELATED TO ALGORITHMS, AUTOMATION AND AI

Abbreviations

AI

Artificial Intelligence

AI HLEG High-Level Expert Group on Artificial Intelligence

CAHAI Council of Europe’s Ad Hoc Committee on Artificial Intelligence

CJEU

Court of Justice of the European Union

CRISP-DM Cross-Industry-Standard-Process-for-Data-Mining

DL

Deep Learning

ECHR

European Convention on Human Rights

EU

European Union

FRAIA Fundamental Rights and Algorithms Impact Assessment

GDPR

General Data Protection Regulation

ML

Machine Learning

TEU

Treaty on European Union

TFEU

Treaty on the Functioning of the European Union

/ 6

Introduction

Automation, rule-based models and Artificial Intelligence (AI) systems are already used for risk assessment in

Belgium, Denmark, Greece, Spain, the Netherlands and other Member States, contributing to focused inspections

and tackling fraud in the domain of labour mobility and social security. However, like humans, algorithms, and in

particular machine learning (ML) algorithms, are vulnerable to biases that can make their predictions unfair and/or

discriminatory – as the Dutch child benefit scandal has indeed already proven.

As part of ELA’s support for national competent authorities and experts from the Member States in the domain of

risk assessment, it is important to understand the biases and other legal and ethical issues involved in developing

and using algorithms, automation or AI for risk assessment in the field of labour mobility and social security (for

data analysis, risk assessment, data matching and data mining, etc.).

This handbook has been prepared as a follow-up to a training session, organised on 26 May 2023, that focused

on sharing knowledge and experience regarding practical, legal and ethical issues concerning the use of machine-

learning tools embedded in AI. Additionally, an overview of the applicable EU and ECHR legal framework

pertaining to equal treatment and non-discrimination was provided. The aim of the handbook is to provide support

to the development of new knowledge and competences in the field through the following key objectives:

1) Understand the types of bias involved both when developing risk assessment tools in the field of labour

mobility and social security, and when utilising them;

2) Understand the legal, practical and ethical issues concerning the use of algorithms, automation (including

rule-based models) or Artificial Intelligence (AI) for risk assessment;

3) Provide an overview of equal treatment and non-discrimination legislation

applicable at EU and ECHR level

and relevant for the use of artificial intelligence or other algorithmic solutions, and discuss the

consequences of non-compliance with the applicable legal framework;

4) Provide overview knowledge about methods to avoid and mitigate the biases and to eliminate

discrimination in the use of algorithmic, automated or AI processes by analysts and legal professionals;

5) Illustrate the theory with practice-oriented case studies and examples.

This practical handbook is structured as follows. Section 1 presents a brief introduction on the basic functioning

of and key terminology related to algorithms, automation and artificial intelligence. Algorithms can easily be biased

or develop bias over time, which then can lead to discrimination. Biases can also amplify discrimination because

of feedback loops and redundant encoding. The concept of ‘bias’ and the different types of bias will be elaborated

in Section 2, while the complexity of bias in algorithmic decision-making will be shown through concrete examples.

More specifically, bias is analysed in the context of discrimination (as a legal and normative concept).

Discrimination can be linked to prejudices and structural inequalities enshrined in data, but may also be the result

of biased algorithmic models, interpretations or deployment. Discrimination can also take different forms. In this

context, understanding the risks and the types of legal challenges they create is key to ensuring equality and

combating discrimination. Section 3 will therefore provide an overview of how the current gender equality and

non-discrimination legislative framework in place in the EU (and internationally, within the Council of Europe)

captures and redresses algorithmic discrimination. Sections 4 and 5 will further focus on mitigation methods for

addressing the aforementioned biases and on the key ethical requirements that need to be ensured. This includes

the provision of examples of good practice (legal and non-legal solutions) for legal compliance with gender equality

law and general non-discrimination law.

7

HANDBOOK ON ETHICAL ISSUES RELATED TO ALGORITHMS, AUTOMATION AND AI

1.0 Algorithms, automation and AI

1.1 Defining key concepts

This section defines an initial set of concepts that are needed to navigate the areas of algorithms, automation, and

Artificial Intelligence (AI). The first two key definitions in this context are those relating to automation and algorithm.

Automation is defined as the creation and application of technologies to produce and deliver goods and services

with minimal human intervention. The implementation of automation technologies, techniques and processes

improves the efficiency, reliability, and/or speed of many tasks that were previously performed by humans. An

algorithm, on the other hand, is a procedure used for solving a problem or performing a computation. Algorithms

act as an exact list of instructions that conduct specified actions step by step in either hardware- or software-based

routines.

In the context of automation and algorithms, four different domains are relevant. Artificial Intelligence (AI) is a

branch of computer science that deals with the automation of intelligent behaviour. It is a very wide definition, and

very often used as an umbrella definition including machine learning and deep learning.

Figure 1: Artificial Intelligence vs. Machine Learning vs. Deep Learning vs. Data Science

Machine Learning (ML) describes the processes in which algorithms learn patterns from data. Machines have

sample data that they can learn to propose new solutions. Machines learn from examples and, after a learning

phase, can generalise and propose (new) solutions (make new predictions). Machine Learning is a process that

introduces an aspect of innovation, moving beyond a deterministic learning process but instead using data to learn

new deterministic and probabilistic systems. This is of course a source of new potential, but also a threat for

discrimination. Deep Learning (DL) instead represents special procedures of machine learning, in which neural

networks are trained with data. Data science is the field of study that combines this domain expertise.

/ 8

1.2 How do they work?

Figure 2: Conceptual approach: using machine learning for predictions in real-world applications

Source: van Giffen, B., Herhausen, D., & Fahse, T. (May 2022). Overcoming the pitfalls and perils of algorithms: A classification

of machine learning biases and mitigation methods. Journal of Business Research, Vol. 144, pages 93-106,

available at: https://www.sciencedirect.com/science/article/pii/S0148296322000881.

The figure above summarises the typical application logic of ML in marketing. Data points are generated or

extracted from the relevant population which are then used to train a predefined ML model that, once completed,

can be used to make predictions which trigger marketing decisions and actions.

Example 1: Netflix and ML

For example, Netflix generates data from the viewing behaviour of all its customers, uses this data to train a

recommendation algorithm, whose predictions then trigger individual movie

and series recommendations for all

its customers.

9

HANDBOOK ON ETHICAL ISSUES RELATED TO ALGORITHMS, AUTOMATION AND AI

Example 2: AI / ML applications for risk assessment

There are several potential examples of AI and ML applications for risk assessment. The first concerns the

profiling of jobseekers using machine learning to predict risk of unemployment. The so-

called job seekers

profiling is based on a logic of optimisation of resources, whereby candidates are profiled based on

a data set

that could potentially be affected by biases that are present in our society, penalising vulnerable groups

who

have more difficulties in finding employment.

Another one could be deployed to predicting the likelihood of labour contract violations.

Potential challenges relate to the non-reiteration of positions of disadvantage or discrimination of people on the

basis of race, gender, ethnicity, etc. as well as the identification of under-represented groups.

1.3 The CRISP-DM model

The CRISP-DM model is a standardised process for the development of AI/ML applications.

1

It is a standard

process in which an understanding of the data is created to solve the problem and to make a prediction for the

machine learning process.

1

The abbreviation stands for Cross-Industry-Standard-Process-for-Data-Mining. Published in 1999 to standardize data mining processes

across industries, the CRISP-DM model has since become the most common process model for data mining, data analytics, and data science

projects in various industries. See: Chapman, P., Clinton, J., Kerber, R., Khabaza, T., Reinartz, T., Shearer, C., and Wirth, R., “CRISP-DM 1.0

step-by-step data mining guide,” 2000.

/ 10

Figure 3: Process phases of the CRISP-DM model

Source: Chapman, P., Clinton, J., Kerber, R., Khabaza, T., Reinartz, T., Shearer, C., and Wirth, R., “CRISP-DM 1.0 step-by-

step data mining guide,” 2000.

Figure 3 displays the six phases of the CRISP-DM process model that can be used to plan, organise, and

implement an ML project:

1) The business understanding phase focuses on understanding the problem and defining the project goals.

2) The data understanding phase starts with initial data collection and proceeds with evaluating the data

needed to solve the problem.

3) The data preparation phase prepares the data (i.e. constructs the final dataset from the initial raw data) for

use in modelling.

4) Several ML techniques to solve the problem are then selected and developed in the modelling phase.

5) The evaluation phase evaluates the performance of the models and selects the best one.

6) The deployment phase implements the selected model in production.

The performance of the model needs to be monitored continuously so that it can be refined as needed.

11

HANDBOOK ON ETHICAL ISSUES RELATED TO ALGORITHMS, AUTOMATION AND AI

Several modules are tried to optimise stochastically, as the prediction performance needs to be

evaluated, and thus an understanding of the problem is required.

Participant input 1: What AI/ML applications are you currently envisioning or using in the context of labour mobility

and social security?

1.4 How can AI and algorithms discriminate?

Examples of algorithmic bias giving rise to discrimination regularly make media headlines: face recognition

applications underperforming for female faces from racialised groups, CV-screening tools excluding female

applicants, risk assessment software systematically flagging citizens with migration background, etc. Part of the

problem of 'algorithmic discrimination' is that decision-making machine learning algorithms rely on data about

the past to make future predictions. Such predictions could amplify the main types of discrimination that have

existed in human decision-making in the past (and thus in the datasets used to train algorithms), such as race or

gender stereotypes about who is best suited to certain types of work. This mechanism is referred to as 'garbage

in garbage out', which means that if the data is biased, it is very likely that the system's output will exhibit biases

too. Yet data is not the only source of algorithmic discrimination. Biased data collection, curation, labelling,

modelling and problem framing, and biased interpretations of algorithmic output could also lead to algorithmic

discrimination. Importantly, human and machine bias interact in the socio-technical systems which algorithms are

parts of.

/ 12

Biases may then continue in the implementation of the system through so-called feedback loops. For example,

a system used to predict which areas of a city are particularly at risk of criminality could rely on past crime data

and this might embed prejudice against ethnic minorities or disadvantaged groups. In turn, the system predictions

could lead to increased police deployments and controls in that area. Over-surveillance would then confirm the

predicted higher crime rates, which leads to reinforcement loops in that area, thereby further fuelling prejudice

against racialised and socio-economically disadvantaged population groups. Importantly, it is not enough to make

a system 'blind' to certain characteristics such as race or gender because these characteristics are encoded in

multiple other data points, for example in the syntax and language used in CVs and application letters, names,

career breaks, tastes, etc. The problem of 'redundant encoding' often gives rise to proxy discrimination against

minority and disadvantaged groups.

Example 3: Examples of harm arising from algorithmic bias

Job searching platform

A first example concerns a matching platform where

job seekers can input search criteria and consult job offers.

Results can be different based on gender language in the search query. For example,

the job searching platform

offers more results when the search term is “chercheur” (

the masculine term for “researcher” in French)

compared to the search term “chercheuse” (the feminine term for “researcher” in French).

This anecdotal

example demonstrates the kind of impact that a biased distribution of valuable information

can have on the real

world.

AMS algorithm developed by Austria

A second example concerns a job seeker profiling system

developed in Austria. The system divides job seekers

into three categories: Group A - High prospects to find employment in the short term; Group B -

Mediocre

prospects, meaning job seekers not part of groups A or C; Group C - Low prospects to find employment

in the

long-term. Austria aimed to streamline resource allocation, make job search assistance more efficient

by

targeting Group B – given that group A was perceived as not problematic and that group C was considered

as

entailing a too high cost compared to the benefits. The system, however, incorporated

and affected a negative

weight to data such as gender and migration background to 'reflect the harsh reality of the labour market'.

2

This

can create a negative loop whereby discrimination patterns

in society are encoded in the distribution of valuable

resources such as training or support for labour market integration.

Fraud detection systems

The Dutch tax authorities introduced in 2013 an algorithmic system aiming to detect potentially f

raudulent

applications for child benefits. The system encoded non-Dutch citizenship as a higher risk factor

received a

higher risk score. For this reason, non-Dutch parents flagged

by the system had their benefits suspended and

were subjected to investigations and benefit recovery policies. This led to financial problems for affected families

as well as cases of mental health problems and stress. This example shows how the system de

sign

strengthened existing institutional prejudices about the link between race, ethnicity and crime.

3

2

Allhutter, D., Cech, F., Fischer, F., Grill, G., & Mager, A. (2020). Algorithmic profiling of job seekers in Austria: how austerity politics are made

effective. Frontiers in Big Data, 5.

3

“Dutch scandal serves as a warning for Europe over risks of using algorithms”, available at: https://www.politico.eu/article/dutch-scandal-

serves-as-a-warning-for-europe-over-risks-of-using-algorithms/ . See also: https://www.amnesty.org/en/latest/news/2021/10/xenophobic-

machines-dutch-child-benefit-scandal/ and https://www.europarl.europa.eu/doceo/document/O-9-2022-000028_EN.html

13

HANDBOOK ON ETHICAL ISSUES RELATED TO ALGORITHMS, AUTOMATION AND AI

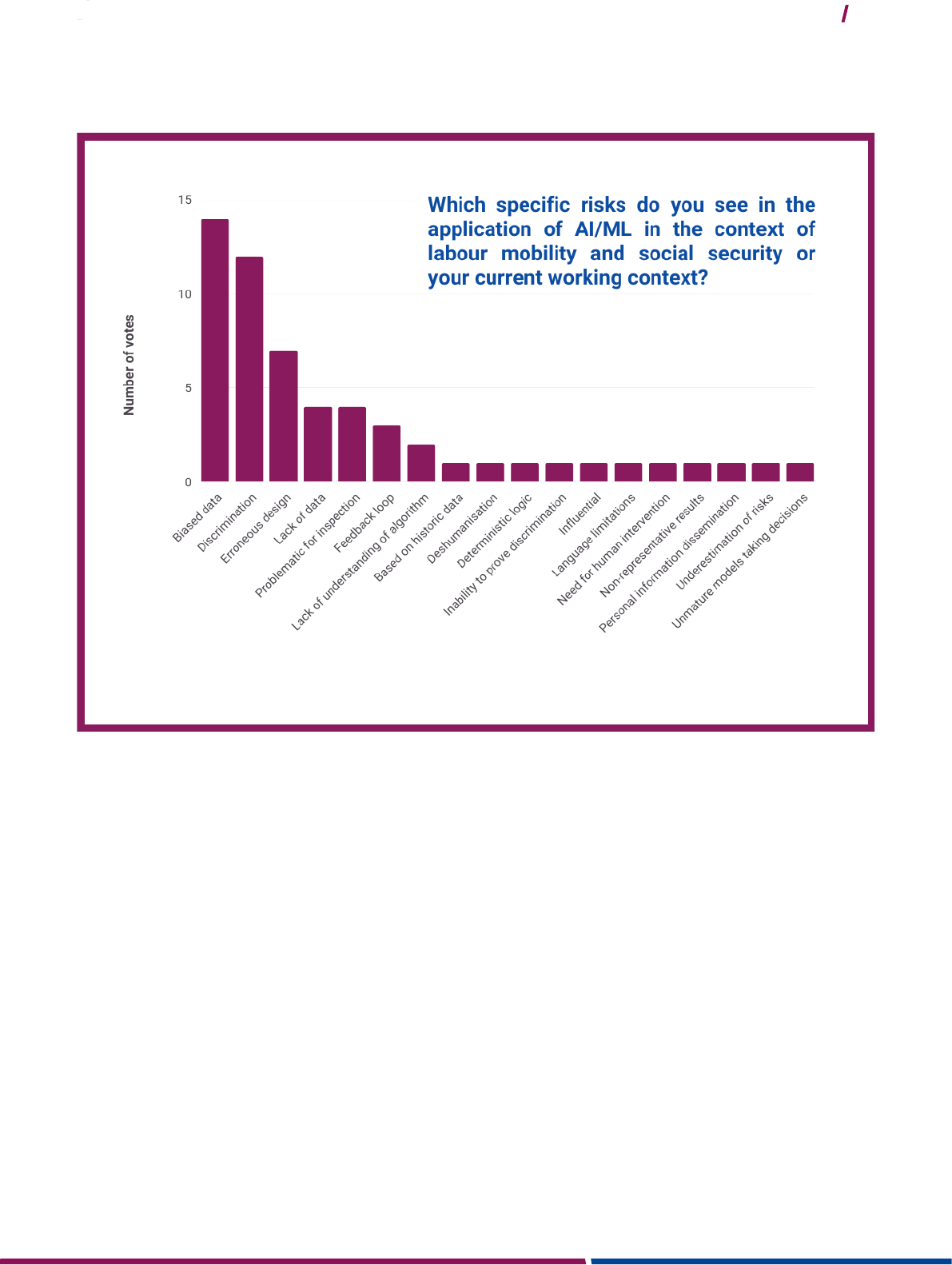

Participant input 2: Which specific risks do you see in the application of AI/ML in the context of labour mobility

and social security or your current working context?

It is important to note that not all kinds of bias are considered discriminatory from a legal point of view and therefore

legally prohibited. European fundamental rights law, anti-discrimination law, consumer protection law, data

protection and proposed AI sectoral regulation offer tools to enforce the protection against (algorithmic)

discrimination.

/ 14

2.0 Machine learning bias

2.1 Overview of machine learning biases

Machine learning bias describes an unintended or potentially harmful property of data or a model that results in

a systematic deviation of algorithmic results. Bias can then be defined as unwanted effects or results which are

evoked through a series of choices and practices in the machine learning developing process.

There are different channels and stages in which biases can be channelled into a system. Biases can already

arise in data collection: there is a lot of room for biases to enter the system, e.g. because the data collection is not

sufficiently representative, or because the data curation presents gender stereotypes. Biases can also arise when

designing a module or problem. The questions the project team asks can generate biases. If a system is developed

to select candidates with the highest leadership rates, by now it is known that this category favours men over

women, because the quality of leadership usually prefers men over women. Several researchers also pointed out

that with the same algorithm, the interpretation of the results could also be differentiated. The output could in fact

be in favour of dominant groups and not in favour of racialised groups.

Leading technology outlets consider AI and machine learning bias as a fundamental, difficult and unresolved

problem. The research question therefore is: what types of bias emerge in machine learning projects and how can

they be mitigated?

Prof. van Giffen and his colleagues conducted a systematic, problem-centred literature review which integrates

existing knowledge about ML biases and mitigation strategies into the CRISP-DM model.

4

They ended up in coding

biases into eight distinct categories and used a real-life case study to provide relevant examples of each type of

bias.

Real-world scenario 1. Two bakeries (excerpt from van Giffen et al. 2022)

The company of interest is a nationwide bakery chain, with a central production, and multiple bakeries in city

centers, train stations and villages. The company uses ML models for decision-maki

ng regarding demand

forecasting, promotions and campaigning, new product development, and their loyalty program.

The case study relates to two bakeries within this bakery chain, that operate in a different context: one is situated

in a train station of a big city and the other one is placed in a small city centre. The customers are

consequently

different: one has villagers, while the other in the train station is mainly visited by

travellers. The two bakeries

thus have a very different purchasing pattern. One centralised production facility supplies both bakeries.

The case study is not focusing on racial or ethnical discrimination, but just on the adverse economic effects

of

bias, and on the mitigation measures that could be put in place. Please b

ear in mind that this example has been

simplified for illustrative purposes.

4

For the full methodology and results, see: van Giffen et al., “Overcoming the pitfalls and perils of algorithms: A classification of machine

learning biases and mitigation methods”, Journal of Business Research, Vol. 144, May 2022, Pages 93-106, available at:

https://www.sciencedirect.com/science/article/pii/S0148296322000881

15

HANDBOOK ON ETHICAL ISSUES RELATED TO ALGORITHMS, AUTOMATION AND AI

Table 1: Overview of ML biases, with examples of Real-world scenario 1 (literally taken from van Giffen et al. 2022)

Bias

Definition

Example

Social Bias

Available data reflects existing bias

in human society prior to

the

creation of the model. This is mainly

“Garbage in garbage out”,

i.e.

already existing bias

replicated

within the system and thus

reinforced.

A customer reward initiative that ignores loyal customers

The bakery implemented a customer reward initiative

that ignores loyal customers. A social bias exists when

the available data reflect existing biases in the

perception of loyalty. In this case, they want to reward

loyal customers with vouchers. Voucher recipients are

identified by average spending and frequency of visits.

Students go there very often, because their school is

close to the bakery. But they do not spend much, so the

customers do not appear as relevant and are not

recognised in the data. And they don't get a voucher.

This replicates an existing prejudice, because we

recognise the family man as a very loyal customer, and

not the students who have less money, but who go to

the bakery very often. We neglect that group of people

who do not spend much. This is a social prejudice that

we replicate in the model.

=> Social bias occurs when available data mirrors

existing biases among customer in loyalty perceptions

Measurement Bias

Chosen features and labels are

imperfect proxies for the real

variables of interest. So, the wrong

measurement is in place.

Capturing the effects of good weather

Baking cakes for a bakery is expensive. But it is even

more expensive if these cakes are not sold. The idea is to

prepare a model that helps us predict the right amount of

cakes. We use for example, the volume of previous sales,

a 30-day moving average, precipitation, and temperature.

However, precipitation is not a good proxy for good

weather, imagine if people wanted to stay home with a

slice of cake.

=> Rainfall was an unsuitable proxy for good weather to

predict the demand for cakes because the key drivers is

not the amount of rain, but the temperature.

Representation

Bias

The input data is not representative

for the real world which

leads to

systematic errors in model

predictions.

Using apples to predict oranges

Let’s roll out our “successful” prediction model to other

bakeries. The sales data of the city center locations is

extracted for training a model that is deployed in the train

station locations. The data that is generated in the train

station is not used in the prediction.

=> Representation bias emerges if the probability

distribution of the development population differs from

the true underlying distribution.

/ 16

Bias

Definition

Example

Label Bias

Labelled data systematically

deviates from the underlying truth.

5

Old wine in new bottles

The bakery has a product relaunch: the 'Wheat Bread' is

now called the new 'Wheat fitness bread', i.e. the same

bread with a different name. If the bakery staff does not

standardise the name of the bread (of the old and new

versions) in the data set, it means that the historical data

are not consistent with the newly generated data. This

causes a problem in the way the data is labelled, leading

to the creation of an unreliable data set. => Label bias

arises when training data is assigned to wrong class

labels. In this case, due to the change in product label,

the newly generated data does not match with the

historical data that serves as input to the forecast model.

Algorithmic Bias

Inappropriate technical

considerations during modelling

lead to systemic deviation of the

outcome.

As simple as possible, but not simpler

If too simple an algorithm is developed that is unable to

capture the factors that determine variations and

demands, the algorithm will not be flexible enough to

successfully predict the distribution of the real world.

=> Algorithmic bias is introduced during the modelling

phase and results

from inappropriate technical

considerations.

Evaluation Bias

A non-representative testing

population or inappropriate

performance metrics are used to

evaluate the model.

Benchmarking with caution

Normally 80 per cent of the data is used to learn the model

and once the model is learned you take the remaining 20

per cent of the data and try to benchmark it. Evaluation

bias can occur if the population of the benchmark dataset

is not representative of the usage population. The

algorithmic model is trained and optimised on the

proprietary bakery data, but is evaluated on a (non-

relevant) publicly available benchmark dataset.

Deployment Bias

The model is used, interpreted and

d

eployed in a different context than

it was built for.

Stick to the knitting

Coffee is correlated with high (but not random) average

expenditure. For customers who buy coffee, therefore,

the model is likely to recommend handing out a voucher.

Deployment bias occurs when the ML model is

inappropriately used or interpreted (even if no other bias

is present) due to e.g. human intervention: the manager

assumes a causal relationship between coffee and

average expenditure and therefore uses the model to

5

It is noted that the term “label” as used here is not necessarily the same as the term “target” variable class label, as typically used in predictive

modelling language.

17

HANDBOOK ON ETHICAL ISSUES RELATED TO ALGORITHMS, AUTOMATION AND AI

Bias

Definition

Example

inappropriately justify the distribution of free coffee to

customers to stimulate spending.

Feedback Bias

The outcome of the model influences

the training data such

that a small

bias can be reinforced by a feedback

loop.

Mind the power of the algorithm

A

competing bakery is basically incentivising its

customers to rate five stars on Google Maps. The

competing bakery says: "If you rate five stars, a get a free

coffee". This causes more customers to go to that bakery

and more customers to rate 5 stars. The stimulation of 5-

star ratings during customer visits manipulates the

ranking of the competitor, which again triggers more

customer visits and hence create a reinforcing feedback

loop. It is not possible to close the gap among the two

bakeries. The same applies when policing. When you

police a neighbourhood, there are more arrests. One has

the idea that the crime rate in that neighbourhood is very

high. You overestimate the effect of the amount of crime

in that area.

=> Feedback bias can emerge when the output of the ML

model influences features that are used as new inputs. An

initially small bias is potentially reinforced through a

feedback loop.

2.2 Other examples of AI bias

Apple and Goldman Sachs

In 2019, Apple and Goldman Sachs launched the "Apple Card". The credit limit was developed using machine

learning methods. The feature “gender” had been given a powerful prediction power for creditworthiness. It was

then found that higher credit limits were granted to men than to women despite the latter having higher credit

scores.

6

We have an algorithm that is efficiently allocating credit limits, but its predictions are socially not desirable.

In this case, the following AI biases have likely caused the flawed credit limit allocation:

Social Bias: Available data of creditworthiness might reflect such a bias in human society, that has not been

identified in the creation of the model. For example, a company might use the data that it has available

opportunistically without being aware how its subsequent use might reinforce social biases that are reflected in

the data.

Evaluation Bias: The input data is not representative for the real world which leads to systematic errors in

model predictions. In this case, the data set of Goldman Sachs (predominantly males) might not have been

adequately representative of the relevant target population for its banking product.

6

Gupta, AH (2019), ‘Are Algorithms Sexist?’, The New York Times (15 November), available at: www.nytimes.com/2019/11/15/us/apple-card-

goldman-sachs.html

/ 18

Feedback Bias: The outcome of the model influences the training data such that a small bias can be reinforced

by a feedback loop.

Amazon’s algorithmic hiring prototype

Amazon employed a machine learning algorithm to filter applicants with tragic consequences given that the

recruitment algorithm filtered out female applicants.

7

This was caused by the fact that the AI was trained with CVs

of the last 10 years that were easily available, but that predominantly consisted of male. In this case, data used to

train the algorithm is outdated and distorts the predictions of the algorithm. In this case, the following AI biases

have likely caused the flawed hiring process:

Social Bias: Because Amazon has hired mostly man, the data from CVs represented mostly male applications,

which is not in line with the socially desired distribution of equality. The available data reflects existing bias in

human society prior to the creation of the model.

Representation Bias: The input data is not representative for the real world (workforce outside of Amazon)

which can further lead to systematic errors in model predictions.

Feedback Bias: The outcome of the model can also deteriorate over time when a bias with an originally small

effect is reinforced by a feedback loop.

Despite the undoubted benefits of AI / ML, AI bias occurs easily, mostly unintentionally, and it is

most often difficult to spot and distinguish.

7

Dastin, J (2018), ‘Amazon scraps secret AI recruiting tool that showed bias against women’ (10 October), available at:

www.reuters.com/article/us-amazon-com-jobs-automation-insight/amazon-scraps-secret-airecruiting-tool-that-showed-biasagainst-women-

idUSKCN1MK08G

19

HANDBOOK ON ETHICAL ISSUES RELATED TO ALGORITHMS, AUTOMATION AND AI

3.0 The legal framework

The section aims to analyse the non-discrimination data protection and AI-specific legal framework at European

level. After highlighting how and why algorithmic discrimination is an issue of EU non-discrimination law, this

chapter assesses to what extent the legal framework in place is fit for purpose, and where the gaps and challenges

lie.

3.1 Anti-discrimination law: The fundamental right to non-

discrimination in Europe

This handbook does not provide legal advice or comprehensive legal guidance. National legal

frameworks and instruments, which should be taken into account when developing a system, fall

outside the scope of this handbook. Please be aware that national law may

differ from EU

provisions. In the case of discrimination for example, EU law sets minimum requirements and

Member States are free to adopt more protective legislation as long as they comply with the EU

treaties.

Treaties are the starting point for considering measures under EU law. As part of primary law, they set the

framework for the EU's actions in specific fields of competence. The body of law that derives from the principles

and objectives of the treaties is known as secondary law. The EU's legislation includes regulations, directives,

decisions, recommendations and opinions.

With regard to non-discrimination, the following key EU primary and secondary law provisions are of the utmost

importance:

Table 2: EU primary anti-discrimination law

EU Primary Law

Article 19 Treaty on the

Functioning of the European

Union (TFEU)

Allows the Council to adopt legislation to combat discrimination in relation to six

characteristics, namely sex, racial

or ethnic origin, religion or belief, disability, age,

sexual orientation.

Article 157 TFEU

Guarantees equality between men and women at work and in pay.

Article 21 of the Charter of

Fundamental Rights

Non-discrimination

1. Any discrimination based on any ground such as sex, race, colour, ethnic or

social

origin, genetic features, language, religion or belief, political or any other

opinion,

membership of a national minority, property, birth, disability, age or

sexual orientation

shall be prohibited.

2. Within the scope of application of the Treaties and without prejudice to any of their

specific provisions, any discrimination on grounds of nationality shall be prohibited.

Article 23 of the Charter of

Fundamental Rights

Equality between women and men

Equality between women and men must be ensured in all areas, including

employment,

work and pay.

/ 20

EU Primary Law

The principle of equality shall not prevent the maintenance or adoption of measures

providing for specific advantages in favour of the under-represented sex.

Table 3: EU secondary anti-discrimination law

EU Secondary Law – i.e. minimum requirements in Directives

8

Ground

Scope of application

Council Directive 2000/43/EC

implementing the principle of equal

treatment between persons

irrespective of racial or ethnic origin

race or ethnic origin

employment, goods and services,

education, social protection, including

social security and healthcare, and

social benefits

Council Directive 2000/78/EC

establishing a general framework for

equal treatment in employment and

occupation

age, religion or belief, disability, sexual

orientation

employment

Council Directive 2004/113/EC

implementing the principle of equal

treatment between men and women

in the access to and supply of goods

and services

sex goods and services

Directive 2006/54/EC on the

implementation of the principle

of

equal opportunities and equal

treatment of men and women in

matters of employment and

occupation

sex employment

At the Council of Europe level, Article 14 of the European Convention on Human Rights (ECHR) that applies to

all EU 27 Member States and to 19 other countries, affirms that: The enjoyment of the rights and freedoms set

forth in this Convention shall be secured without discrimination on any ground such as sex, race, colour, language,

religion, political or other opinion, national or social origin, association with a national minority, property, birth or

other status.

The enforcement of the ban on discrimination in EU law and the ECHR is overseen respectively by the Court of

Justice of the EU in Luxembourg and the European Court on Human Rights in Strasbourg.

8

A directive is a legal act adopted by the EU institutions directed to all Member States and it is binding as to the result to be achieved. It is up

to the single Member States to determine the form and methods to transpose in its legal framework law. The national authorities must notify

the European Commission of the measures taken. See: https://eur-lex.europa.eu/EN/legal-content/glossary/directive.html

21

HANDBOOK ON ETHICAL ISSUES RELATED TO ALGORITHMS, AUTOMATION AND AI

3.2 Applying anti-discrimination law

3.2.1 When is bias unlawful discrimination?

Figure 4: When is algorithmic bias unlawful discrimination?

There are three conditions for algorithmic bias to fall within the scope of unlawful discrimination. The first

condition is linked to the personal scope of anti-discrimination law. Algorithmic bias must either harm a protected

group or result in the unfavourable treatment of people based on a protected ground, i.e. sex, racial or ethnic

origin, sexual orientation, age, disability and religion or belief. Some EU Member States have gone beyond this

personal scope and ban discrimination on a broader basis. The second condition is that algorithmic bias falls within

the material scope of anti-discrimination law. Algorithmic bias will only be legally prohibited if it falls within the

scope of application of the law, i.e. the fields of work and employment, education, the media and the sale and

consumption of goods and services. The third condition in order for bias to constitute unlawful discrimination is

that it must fall under one or two definitions: either it must result in a form of differential treatment or it must create

a disproportionate disadvantage for a protected group. Note that the directives are addressed to Member States

that must transpose their provisions into national law, which then applies to individuals, private entities such as

companies, and public bodies such as state authorities. However, the Court of Justice of the EU has interpreted

the prohibition of discrimination as directly applicable in disputes between private parties, including individuals and

companies.

9

Direct discrimination is defined in EU law as a situation in which ‘one person is treated less favourably than

another is […] in a comparable situation on any of the [protected] grounds’ [defined in the relevant directives].

10

9

See e.g. C-414/16 - Egenberger (Judgment of the Court (Grand Chamber) of 17 April 2018, Vera Egenberger v Evangelisches Werk für

Diakonie und Entwicklung e.V., ECLI:EU:C:2018:257).

10

See, e.g., Article 2(2)(a) Directive 2000/43/EC; Article 2(2)(a) Directive 2000/78/EC; Article 2(a) Directive 2004/113/EC and Article 2(1)(a)

Directive 2006/54/EC.

/ 22

Direct discrimination focuses on unfavourable treatment or differential treatment and captures situations in

which a decision is made taking into consideration a protected ground, to the disadvantage of the person or

group of persons related to that protected ground.

Example 4: A concrete example of direct discrimination

The police of an EU Member State open a call for recruitment of new staff. The vacancy is only open to male

candidates, due to their physical characteristics. This a form of direct discrimination, because it directly mentions

the protected ground of sex.

Indirect discrimination refers to situations ‘where an apparently neutral provision, criterion or practice would

put [members of a protected category] at a particular disadvantage compared with other persons, unless that

provision, criterion or practice is objectively justified by a legitimate aim and the means of achieving that aim

are appropriate and necessary’.

11

Instead of focusing on the unfavourable treatment of given groups and

individuals because of a given protected ground, the notion of indirect discrimination places the focus on the

disadvantageous effects of any given – apparently neutral – practice or measure.

Example 5:

A concrete example of indirect discrimination

The police of an EU Member State open a call for recruitment of new staff. The vacancy requires that the

candidates for a post as police officer are

at least 170 cm tall. In this case, it is not direct discrimination because

protected grounds are not explicitly mentioned, but in practice it creates a disproportionate disadvantage

for

women. As a result of the call, fewer women than men will be able to apply.

The distinction between direct and indirect discrimination has important legal consequences. There is in principle

no justification for direct discrimination, save for certain exceptions, such as genuine and determining occupational

requirements.

12

By contrast, the notion of indirect discrimination triggers an open-ended regime of justifications.

This means that a defendant can invoke justifications to be put to the consideration of a court. The Court of Justice

of the EU applies a so-called proportionality test, the aim of which is to find out whether the existence of a

disproportionate disadvantage can be justified by a measure serving a legitimate aim, appropriate to fulfil that aim,

and strictly necessary in the sense that no other less intrusive measure could have been taken to fulfil the same

purpose.

13

One particular challenge in this context is for applicants and respondents to bring evidence to support

or rebut discrimination claims. Several problems arise: machine learning models evolve when exposed to new

data, some of them are so complex that they represent so-called 'black boxes', and trade secrets and intellectual

property rights can restrict applicants' access to decision-making processes. In general, access to intelligible,

meaningful and actionable information in the context of algorithmic discrimination claims might be difficult to obtain

both for applicants and judges, but this might also be the case for defendants themselves.

11

See, e.g., Article 2(2)(b) Directive 2000/43/EC; Article 2(2)(b) Directive 2000/78/EC; Article 2(b) Directive 2004/113/EC; Article 2(1)(b)

Directive 2006/54/EC.

12

Such exceptions include, for instance, genuine and determining occupational requirements, which can be invoked as laid out in Article 4

Directive 2000/43/EC; Article 4 Directive 2000/78/EC; and Article 14(2) of Directive 2006/54/EC. Article 6 of Directive 2000/78/EC also contains

a number of exceptions to direct age discrimination.

13

Purely economic justifications are excluded from the scope of acceptable justifications in principle.

23

HANDBOOK ON ETHICAL ISSUES RELATED TO ALGORITHMS, AUTOMATION AND AI

Participant input 3: Is AI more likely to produce direct or indirect discrimination?

Is AI more likely to produce direct or indirect discrimination?

According to 83% of respondents, AI is more likely to produce indirect discrimination, whereas only 17% believe

it produces direct discrimination.

Commentators have highlighted the risks of indirect discrimination posed by algorithmic decision-making and risk

assessment, in particular through proxy discrimination where protected characteristics are not directly used as

variables but where correlated datapoints encode discrimination nonetheless.

14

Recent research, however,

demonstrates that algorithmic bias can amount to direct discrimination, in particular when they lead to decisions

that exclude an entire protected group from a valuable opportunity such as a job position.

15

The two central notions

in gender equality and non-discrimination law, namely direct and indirect discrimination, have a different capacity

to adequately capture the challenges posed by machine learning models. Yet, when considering whether the use

of given variables in algorithmic systems, it is important to consider the reason for using them and the aim of the

system. For example, actively using gender as a decision variable might amount to discrimination if the system is

used to restrict access to valuable opportunities whereas it might amount to positive action if used to allocate

support or temporary benefits. The case of age illustrates this point as well: used in a system deployed to help

predict sickness, it can be a useful variable for diagnosis purposes whereas using age as a predictor of

unemployment in a system used to restrict access to labour market integration support programmes might be

discriminatory.

Table 4: Direct versus indirect discrimination in the framework of AI/ML

Direct discrimination vs indirect discrimination in the framework of AI/ML

16

Strengths

Weaknesses

Direct discrimination

It is not necessary to prove an

intention to

discriminate to show direct discrimination under

EU law, meaning that direct discrimination also

covers situations where the developers or users

of an algorithm did not intend to design a

discriminatory model, but the deployed

algorithm treats individuals and groups sharing

certain protected categories less favourably.

Direct discrimination covers situations in which

a person is treated unfavourably because he or

she belongs to a vulnerable group, without

sharing its characteristics (

discrimination by

ascription and association).

The processing of data and its

categorisation by algorithms may not be

comprehensible to the human brain. For

example, the variables and categories on

which an algorithm is based may have no

meaning for humans, in the case for

instance of mere mathematical

probabilities. Thus, there might be a

difficulty in understanding whether they

can be considered as (direct proxies for)

protected characteristics or not.

The black box nature of certain algorithms

could represent a challenge when it

comes to proving direct discrimination in

14

See e.g. Hacker, P. (2018). Teaching fairness to artificial intelligence: Existing and novel strategies against algorithmic discrimination under

EU law. Common Market Law Review, Vol. 55, Issue 4, pages 1143 – 1185.

15

Adams‐Prassl, J., Binns, R. and Kelly‐Lyth, A. (2022). Directly Discriminatory Algorithms. Modern Law Review, Vol. 86, Issue 1, pages 144-

175.

16

European Commission, Directorate-General for Justice and Consumers, Gerards, J., Xenidis, R., (2021). Algorithmic discrimination in

Europe: challenges and opportunities for gender equality and non-discrimination law, Publications Office, pages 67-73, available at:

https://data.europa.eu/doi/10.2838/544956.

/ 24

Direct discrimination vs indirect discrimination in the framework of AI/ML

16

Direct discrimination covers situations of proxy

discrimination where proxies are 'inextricably

linked' with a protected ground (e.g. pregnancy

and sex).

17

The increasing awareness of

relevant legal

obligations could lead to a reduction of direct

discrimination patterns.

Direct discrimination could decrease in the

context of algorithms, as the direct inclusion of

protected categories in the decision-making

process could produce lower predictive

accuracy. For this reason, developers aware of

these risks might remove protected categories

from the pool of available variables for

algorithmic decision making in order to avoid

direct discrimination. Yet, it might also make

sense to include these characteristics to actively

trace or combat direct discrimination

(positive action).

a trial, due to the need to establish a

comparator under EU law. If the lack of

transparency or intelligibility (black box) of

the functioning of an algorithm prevents

the gathering of evidence on how the

algorithm has treated (or

would have

treated) an individual, then the direct

discrimination may be entirely precluded.

Indirect

discrimination

Regardless of the intention of the developers, or

the company or public administration using the

AI/ML model, if the AI/ML disproportionately

disadvantages a protected group the situation

falls under the definition of indirect

discrimination.

The indirect discrimination concept focuses

mainly on the effects of any decision, measure

or policy in terms of disadvantage experienced

by protected groups.

The concept of indirect discrimination, as

interpreted by the CJEU, can adequately

address situations of discrimination by proxy,

i.e. even

in situations where the group or

individual being harmed does not possess the

protected characteristic in question.

Indirect discrimination will be difficult to

prove for individual applicants without the

support of monitoring bodies

or

organisations.

Access to group-

based data on the

potentially discriminatory effects

of

algorithmic

systems on different groups

will condition the ability to bring proof and

establish meaningful comparisons

in the

context of court proceedings.

The proportionality test that accompanies

the assessment

of cases of indirect

discrimination is open-ended. Courts

might

encounter difficulties when

assessing whether the parameters of a

n

algorithmic system are 'proportionate' i

.e.

whether they reach

the right balance in

fairness/accuracy trade-

offs or use the

right technical definition of fairness.

18

It is noted that proxy discrimination has sometimes been treated by the CJEU as a case of direct discrimination

while it has been considered indirect discrimination in other cases. For instance, pregnancy discrimination in the

17

See e.g. C-177/88 - Dekker v Stichting Vormingscentrum voor Jong Volwassenen (Judgment of the Court of 8 November 1990, Elisabeth

Johanna Pacifica Dekker v Stichting Vormingscentrum voor Jong Volwassenen (VJV-Centrum) Plus, ECLI:EU:C:1990:383).

18

Many technical definitions of fairness co-exist and some are incompatible.

25

HANDBOOK ON ETHICAL ISSUES RELATED TO ALGORITHMS, AUTOMATION AND AI

Dekker case

19

was considered by the CJEU as displaying an 'inseparable link' to gender discrimination and was

therefore treated as direct discrimination. But in another case (Jyske Finans), the differential treatment of an EU

citizen based on his

birthplace was not considered a case of direct (nor indirect) discrimination on grounds of racial

or ethnic origin.

20

It is therefore difficult to identify the legal framework applicable to algorithmic proxy

discrimination, as the approach of the CJEU has not

always been consistent in the past.

3.2.2 Fairness and bias versus equality and discrimination

The notions of ‘bias’ and ‘fairness’ are grounded in statistics, computer science and ethics and

have specific meanings that are not necessarily well-suited to capturing the specific problems that

arise in relation to the law.

‘Bias’ has a much wider meaning than ‘discrimination’ as it is not only concerned with unfair errors

but with all kinds of ‘systematic’ errors, which can include those of a statistical, cognitive, societal,

structural or institutional nature.

When invoked in the particular context of ‘fairness’, ‘algorithmic bias’ refers to a particular type of

error that ‘places privileged groups at a systematic advantage and unprivileged

groups at a

systematic disadvantage’. Even though there is commonality with the legal defin

ition of

‘discrimination’, the term ‘algorithmic bias’ is more encompassing than the legal term ‘algorithmic

discrimination’ as it refers to any kind of disadvantage that could be viewed as ethically or morally

wrong. From a legal point of view, ‘algorithmic discrimination’, on the other hand, only pertains to

the unjustified unfavourable treatment of, or disadvantage experienced by, specific categories of

population protected by the law either explicitly (e.g. protected grounds) or implicitly (e.g. general

or open-textured non-discrimination clauses).

3.2.3 Gaps in and limitations of legal scope

Hierarchy of protection

Table 3 shows clearly that discrimination in relation to racial or ethnic origin is prohibited by the Racial Equality

Directive 2000/43/EC in employment matters, social protection, including social security and healthcare, social

advantages, education and the access to and supply of goods and services. The material scope of this Directive

is thus far-reaching and extends even beyond that of the gender acquis, since it also includes education.

Sex discrimination is prohibited in the realm of employment as well as in the access to goods and services. The

content of media and advertising and education are outside of the material scope of Directive 2004/113/EC. In

light of the growing use of AI in the fields concerned, these exceptions might lead to important weaknesses in

19

Case C-177/88, [12] and [17]. The case concerned the decision of an employer not to hire a female applicant because she was pregnant.

The Court indicated that 'only women can be refused employment on grounds of pregnancy and such a refusal therefore constitutes direct

discrimination on grounds of sex'. It also explained that 'whether the refusal to employ a woman constitutes direct or indirect discrimination

depends on the reason for that refusal. If that reason is to be found in the fact that the person concerned is pregnant, then the decision is

directly linked to the sex of the candidate'.

20

C-668/15 - Jyske Finans (Judgment of the Court (First Chamber) of 6 April 2017, Jyske Finans A/S v Ligebehandlingsnævnet, acting on

behalf of Ismar Huskic, ECLI:EU:C:2017:278), [20], [33]-[37]. The case concerned the request of additional proof of identity by a credit institution

for loan applicants born outside the EU, the Nordic countries, Switzerland and Liechtenstein. While the credit institution argued that this was

required under existing rules on money laundering, the applicant claimed that it was discriminatory on grounds of ethnic origin. The Court of

Justice indicated that 'the practice of a credit institution which requires a customer whose driving licence indicates a country of birth other than

a Member State of the European Union or the EFTA to produce additional identification' is 'neither directly nor indirectly connected with the

ethnic origin of the person concerned' and therefore does not give rise to either direct or indirect discrimination.

/ 26

terms of the ability of EU law to redress algorithmic discrimination against women, trans, intersex and gender non-

conforming persons.

Algorithms can easily be used in media and advertising services, and gender-based algorithmic discrimination is

plentiful in these areas. This often leads to harmful stereotyping.

21

It has been shown, for instance, that online

search results tend to reflect the gender segregation that characterises the real labour market: in the absence of

bias mitigation measures, mostly female pictures are shown when searching for a “nurse” while mostly male

pictures appear when searching for a “doctor”. These problems can be addressed at national level, but only in

those Member States that have adopted legal frameworks on the matter that can go beyond the letter of EU law.

The grounds of religion or belief, disability, age and sexual orientation are protected under another instrument,

Directive 2000/78/EC, which, unlike the Racial Equality Directive, only applies to employment matters. As a result,

under EU secondary law, discrimination on grounds of religion or belief, disability, age and sexual orientation is

not prohibited in relation to education, social security, and access to goods and services including healthcare,

housing, advertising and the media. This problem is well known among discrimination lawyers and has been

referred to as constituting an undue ‘hierarchy’ of grounds in EU non-discrimination law.

This ‘hierarchy of grounds’ that characterises EU non-discrimination legislation is highly problematic. Indeed,

algorithmic discrimination is likely to arise in areas where only race and gender equality are protected, and in

particular in the market for goods and services. Although ML/AI discrimination is very likely to happen in the market

of good and services, EU law does not protect EU citizens against algorithmic discrimination in this area, meaning

that certain groups can be lawfully excluded from the access of certain goods and services, charged higher prices

or be targeted by discriminatory advertising on online platforms.

ML algorithms ar

e also increasingly used in the field of education. The lack of EU legal guarantees against

discrimination on the grounds of gender, age, disability, sexual orientation and religion is therefore problematic in

this field. In the area of AI/ML, it leads to a reiteration of a negative loop in the under-representation of women and

minority groups in the curricula related to IT, software development and sciences, which leads to under-

representation and discrimination at a later stage in the labour market.

22

Intersectional discrimination

Another important aspect to consider is the limitations of the EU legal framework in relation to intersectional

discrimination. According to the Gender Shades project

23

, facial recognition software of large commercial platforms

is biased against several groups, but especially against dark-skinned women. This is a case of discrimination on

two intersecting protected grounds, gender and race, called intersectional discrimination. Inherent in the notion of

intersectional discrimination is the fact that the discriminatory harm might not exist in relation to a sole protected

ground taken in isolation, but rather only in relation to a combination of protected grounds.

European non-discrimination laws do not fully recognise intersectional discrimination, as illustrated by the decision

of the Court of Justice of the European Union (CJEU) in the Parris case

24

. In its decision in Parris, the CJEU on

the one hand recognised the existence of multiple discrimination, stating that ‘discrimination may indeed be based

on several of the grounds’ protected under EU law, but on the other hand it rejected a finding of intersectional

discrimination, declaring that 'there is [...] no new category of discrimination resulting from the combination of more

21

Stereotyping can be harmful for various reasons, for instance when undermining dignity, preventing access to certain goods, services or

social recognition, maintaining gender segregation by prescribing certain roles and maintaining given expectations, etc. See e.g. Timmer, A.

(2016). Gender Stereotyping in the Case Law of the EU Court of Justice. European Equality Law Review, Issue 1, p. 38-9.

22

Gerards & Xenidis (2021).

23

Buolamwini, J. & Gebru, T. (2018). Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification. In Sorelle A.

Friedler, Christo Wilson, editors, Conference on Fairness, Accountability and Transparency, FAT 2018, 23-24 February 2018, New York, NY,

USA. Volume 81 of Proceedings of Machine Learning Research, pages 77-91, PMLR, 2018.

24

C-443/15 - Parris (Judgment of the Court (First Chamber) of 24 November 2016, David L. Parris v Trinity College Dublin and Others,

ECLI:EU:C:2016:897).

27

HANDBOOK ON ETHICAL ISSUES RELATED TO ALGORITHMS, AUTOMATION AND AI

than one of those grounds...' where discrimination based on each protected ground taken in isolation cannot be

proven. However, intersectional discrimination may be covered by those Member States that have decided to go

beyond EU law.

The lack of redress for intersectional discrimination in EU law – despite the recognition of the issue of ‘multiple

discrimination’ in Directives 2000/78/EC and 2000/43/EC – is particularly problematic in light of the increasing risks

of intersectional discrimination linked to the granular profiling abilities of algorithmic systems fed by pervasive data

mining and data brokering: it will be rare for an algorithmic system to discriminate only on the basis of a protected

ground, since it will usually base its output on a multitude of different factors and variables that are all statistically

correlated. The focus on a few protected grounds and the lack of proper legal recognition of intersectional

discrimination in the current EU and national legislation means that such instances of ‘combined’ or highly

differentiated discrimination cannot be effectively redressed.

Further, as there is no available data on intersectional di

scrimination, this specific type of discrimination is difficult

to test and detect. Intersectional discrimination can create feedback loops, leading to exclusion and invisibility of

vulnerable groups. When an AI/ML system is tested, it is important to test it also in the intersection between the

different grounds.

Emergent patterns of discrimination?

Algorithmic discrimination challenges the current boundaries of EU non-discrimination law. Even though Article 21

of the Charter of Fundamental Rights establishes a non-exhaustive and open-ended list of discrimination grounds

by prohibiting discrimination ‘based on any ground such as’ the characteristics listed, the CJEU curtailed the

potential of Article 21 as a basis for introducing more flexibility in the personal scope of EU secondary equality and

non-discrimination law.

25

The exhaustive nature of the list of protected grounds in EU law and the limits put by the

CJEU to their expansive interpretation raise problems in relation to proxy discrimination, an issue that is particularly

acute in respect of algorithms. Therefore, an issue arises with the emergence of new patterns of discrimination,

such as social origin. EU secondary law does not protect all groups which are at risk of social sorting or algorithmic

exclusion from discrimination. While in EU primary law, the open-ended clause of Article 21 of the Charter of

Fundamental Rights protects social origin as a ground of discrimination, the CJEU has adopted in the FOA case

a very restrictive approach on new emergent patterns of discrimination by excluding an extension protection by

analogy.

26

Improving the quality of an AI/ML model can be used as a legal argument in the context of e.g. a

proportionality test under European discrimination law. However, the improvement of an already

pre-

existing practice does not in any way guarantee that the deployment of an AI/ML model

practice will be accepted by the court as having some information value. It cannot be concluded

whether such improvement will suffice to meet the necessity threshold applied by e.g. the Court

of Justice. Such a decision is highly context-dependent and simply cannot be predicted. In other

terms, it is not clear whether a court might consider ‘the relative improvement of the situation’

(comparing a situation where age cut-off points are used with a situation with the use of more

evolved ML techniques) as meeting the necessity criteria if the system produces ‘disproportionate

disadvantage’ against a protected group.

EU discrimination law does not explicitly give

consideration to things like ‘relative improvement’ compared to a pre-existing scheme. It is hereby

to be taken into account that, whether the discrimination is ‘inadvertent’ does not matter under EU

discrimination law, i.e. no intention is required to qualify for discrimination.

25

See e.g. C-354/13 - FOA (Judgment of the Court (Fourth Chamber), 18 December 2014, Fag og Arbejde (FOA) v Kommunernes

Landsforening (KL), ECLI:EU:C:2014:2463).

26

Case C‐354/13.

/ 28

3.3 AI Sectoral Regulation. Taking stock of European

developments

Since March 2018, the European Union has put Artificial Intelligence on the political agenda. The 2021 review of

the Coordinated Plan on AI outlines a vision to accelerate, act, and align priorities with the current European and

global AI landscape and bring AI strategy into action. The European AI Strategy aims at making the EU a world-

class hub for AI and ensuring that AI is human-centric and trustworthy. The Commission has proposed two inter-

related legal initiatives that will contribute to building trustworthy AI, while the Council of Europe wants to ensure

that human rights, democracy and the rule of law are protected and promoted in the digital environment.

3.3.1 EU AI Act (proposed)

The Proposal for a Regulation laying down harmonised rules on artificial intelligence (EU AI Act) is the

Commission’s first-ever legal framework on AI and aims at addressing the risks of AI while positioning Europe.

The proposed EU AI Act categorises the risks of specific uses of AI into four different levels: unacceptable risk,

high risk, limited risk, and minimal risk.

Figure 5: The risk-based approach in the proposed EU AI Act

Source: https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

For the high-risk category, the proposed Regulation sets binding provisions for systems that are particularly at risk

of endangering fundamental rights.

The proposed EU AI Act addresses discrimination (mainly in its preamble) and algorithmic bias:

Explanatory memorandum & recital 28: when classifying an AI system as high-risk, it is of particular relevance

to consider ‘[t]he extent of the adverse impact caused by the AI system on the fundamental rights protected by

the Charter' including ‘non-discrimination’ (Art. 21 EUCFR) and ‘equality between women and men’ (Art. 23

EUCFR)

29

HANDBOOK ON ETHICAL ISSUES RELATED TO ALGORITHMS, AUTOMATION AND AI

Recitals 35, 36 and 37 warn that AI systems used in core sectors such as education, employment and essential

services are liable to ‘violate [...] the right not to be discriminated against' and 'perpetuate historical patterns of

discrimination’.

Recital 44 explicitly refers to non-discrimination law when stressing the importance of high-quality data

requirements to ensure that a high-risk AI system ‘does not become the source of discrimination prohibited by

Union law’.

What does it mean for national authorities in the domain of labour mobility and social security?

Recital 37 on the one hand affirms that “Natural persons applying for or receiving essential public assistance

benefits and services from public authorities are typically dependent on those benefits and services and in a

vulnerable position in relation to the responsible authorities. If AI systems are used for determining whether such

benefits and services should be denied, reduced, revoked or reclaimed by authorities, including whether

beneficiaries are legitimately entitled to such benefits or services, those systems may have a significant impact on

persons’ livelihood and may infringe their fundamental rights, such as the right to social protection, non-

discrimination, human dignity or an effective remedy. Those systems should therefore be classified as high-risk”.

This means that an AI/ML module in the area of labour mobility or social security is likely to be classified as a high-

risk system. Nonetheless, the same Recital 37 affirms that “this Regulation should not hamper the development

and use of innovative approaches in the public administration, which would stand to benefit from a wider use of

compliant and safe AI systems, provided that those systems do not entail a high risk to legal and natural persons”.

In this context, Article 10 of the proposed Act on data and data governance is particularly relevant.

Article 10 of the proposed EU AI Act is particularly relevant when it comes to non-discrimination

Article 10 - Data and data governance